[TOC]

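0x00 Preface

Description: My blog and earlier articles covered building Kubernetes cluster environments. K8S and its related components keep iterating, though, so those versions may differ from the ones readers use today. At this point in time [April 26, 2022 10:08:29], I therefore build a highly available Kubernetes cluster using Ubuntu 20.04, HAProxy, Keepalived, containerd, etcd, kubeadm, kubectl and related tools and plugins [latest or stable versions]. Basic K8S knowledge is not introduced here. If you are new to it, please work through the following [blog articles] (https://blog.weiyigeek.top/tags/k8s/) or [Bilibili column] (https://www.bilibili.com/read/readlist/rl520875?spm_id_from=333.999.0.0) in order.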

Overview

Article sources: https://mp.weixin.qq.com/s/sYpHENyehAnGOQQakYzhUA , https://mp.weixin.qq.com/s/-ksiNJG6v4q47ez7_H3uYQ

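0x01 Environment Preparation

Host plan

| Host address | Hostname | Spec (CPU/RAM) | Role | Software components |
| --- | --- | --- | --- | --- |
| 10.10.107.223 | master-223 | 4C/4G | Control-plane node | |
| 10.10.107.224 | master-224 | 4C/4G | Control-plane node | |
| 10.10.107.225 | master-225 | 4C/8G | Control-plane node | |
| 10.10.107.226 | node-1 | 4C/2G | Worker node | |
| 10.10.107.227 | node-2 | 4C/2G | Worker node | |
| 10.10.107.222 | weiyigeek.cluster.k8s | - | Virtual IP (VIP) | Virtual NIC address |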

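Tip: The operating system used here is Ubuntu 20.04. It has been security-hardened and kernel-tuned to meet China's Multi-Level Protection Scheme 2.0 (等保2.0) requirements [SecOpsDev/Ubuntu-InitializeSecurity.sh at master · WeiyiGeek/SecOpsDev (github.com)]. If your Linux has not been configured this way, your environment may differ slightly from mine. To harden Windows Server, Ubuntu or CentOS, refer to the hardening scripts below, and feel free to give the repository a star.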

Hardening script: https://github.com/WeiyiGeek/SecOpsDev/blob/master/OS-%E6%93%8D%E4%BD%9C%E7%B3%BB%E7%BB%9F/Linux/Ubuntu/Ubuntu-InitializeSecurity.sh

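Software versions

| Category | Software | Version |
| --- | --- | --- |
| Operating system | Ubuntu 20.04 LTS | 5.4.0-107-generic (kernel) |
| TLS certificate issuance | cfssl | v1.6.1 |
| | cfssl-certinfo | v1.6.1 |
| | cfssljson | v1.6.1 |
| High availability | ipvsadm | 1:1.31-1 |
| | haproxy | 2.0.13-2 |
| | keepalived | 1:2.0.19-2 |
| etcd database | etcd | v3.5.4 |
| Container runtime | containerd (cri-containerd-cni) | v1.6.4 |
| Kubernetes | kubeadm | v1.23.6 |
| | kube-apiserver | v1.23.6 |
| | kube-controller-manager | v1.23.6 |
| | kubectl | v1.23.6 |
| | kubelet | v1.23.6 |
| | kube-proxy | v1.23.6 |
| | kube-scheduler | v1.23.6 |
| Network plugin & auxiliary software | calico | v3.22 |
| | coredns | v1.9.1 |
| | kubernetes-dashboard | v2.5.1 |
| | k9s | v0.25.18 |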

Network plan

| Subnet | CIDR | Note |
| --- | --- | --- |
| nodeSubnet | 10.10.107.0/24 | C1 |
| ServiceSubnet | 10.96.0.0/16 | C2 |
| PodSubnet | 10.128.0.0/16 | C3 |

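Tip: I have packaged the software and plugins used in the environment above so they can be downloaded from the links below. To get the access password, follow the WeiyiGeek WeChat official account and reply with the keyword 【k8s二进制】 ("k8s binary").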

Download link 1: http://share.weiyigeek.top/f/36158960-578443238-a1a5fa (access password: follow the WeiyiGeek official account and reply with 【k8s二进制】)

Download link 2: https://download.csdn.net/download/u013072756/85360801

```
/kubernetes-cluster-binary-install
.
├── calico
│   └── calico-v3.22.yaml
├── certificate
│   ├── admin-csr.json
│   ├── apiserver-csr.json
│   ├── ca-config.json
│   ├── ca-csr.json
│   ├── cfssl
│   ├── cfssl-certinfo
│   ├── cfssljson
│   ├── controller-manager-csr.json
│   ├── etcd-csr.json
│   ├── kube-scheduler-csr.json
│   ├── proxy-csr.json
│   └── scheduler-csr.json
├── containerd.io
│   └── config.toml
├── coredns
│   ├── coredns.yaml
│   ├── coredns.yaml.sed
│   └── deploy.sh
├── cri-containerd-cni-1.6.4-linux-amd64.tar.gz
├── etcd-v3.5.4-linux-amd64.tar.gz
├── k9s
├── kubernetes-dashboard
│   ├── kubernetes-dashboard.yaml
│   └── rbac-dashboard-admin.yaml
├── kubernetes-server-linux-amd64.tar.gz
└── nginx.yaml
```

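0x02 Installation and Deployment

1. Basic host environment preparation

Step 01. [All hosts] Set the hostname of each host according to the host plan above.

```bash
# Run the matching command on each host
hostnamectl set-hostname master-223
hostnamectl set-hostname master-224
hostnamectl set-hostname master-225
hostnamectl set-hostname node-1
hostnamectl set-hostname node-2
```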

Step 02. [All hosts] Add static name resolution for the planned hostnames and IP addresses to /etc/hosts.

```bash
sudo tee -a /etc/hosts <<'EOF'
10.10.107.223 master-223
10.10.107.224 master-224
10.10.107.225 master-225
10.10.107.226 node-1
10.10.107.227 node-2
10.10.107.222 weiyigeek.cluster.k8s
EOF
```
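Running the `tee -a` above a second time appends duplicate entries. As a minimal sketch (the function names `add_host_entry` and `add_planned_hosts` are hypothetical, introduced only for illustration), the same host plan can be applied idempotently by appending a line only when that exact line is not already present:

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to FILE only if that exact line is missing,
# so re-running the step does not create duplicate entries.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # -q quiet, -x whole-line match, -F fixed string (dots are not regex wildcards)
  if ! grep -qxF "${ip} ${name}" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Apply the whole host plan from the table above to FILE (e.g. /etc/hosts, as root).
add_planned_hosts() {
  local file="$1" ip name
  while read -r ip name; do
    add_host_entry "$file" "$ip" "$name"
  done <<'EOF'
10.10.107.223 master-223
10.10.107.224 master-224
10.10.107.225 master-225
10.10.107.226 node-1
10.10.107.227 node-2
10.10.107.222 weiyigeek.cluster.k8s
EOF
}
```

After defining the functions in a root shell, `add_planned_hosts /etc/hosts` can be run any number of times; the sketch assumes the target file already ends with a newline, as /etc/hosts normally does.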

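Step 03. Verify that the IP address, MAC address and product_uuid are unique on every node, and that the nodes can communicate with one another.

```bash
# IP and MAC addresses
ifconfig -a
# product_uuid
sudo cat /sys/class/dmi/id/product_uuid
```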

Step 04. [All hosts] Synchronize the system time and set the time zone.

```bash
date -R
sudo ntpdate ntp.aliyun.com
sudo timedatectl set-timezone Asia/Shanghai
sudo timedatectl set-local-rtc 0
timedatectl
```

Step 05. [All hosts] Disable swap, and comment out the swap entry in /etc/fstab so it stays off after a reboot.

```bash
swapoff -a && sed -i 's|^/swap.img|#/swap.img|g' /etc/fstab
free | grep "Swap:"
```

Step 06. [All hosts] Tune the kernel parameters. Each line rewrites the setting if it already exists (even commented out) and appends it otherwise.

```bash
egrep -q "^(#)?vm.swappiness.*" /etc/sysctl.conf && sed -ri "s|^(#)?vm.swappiness.*|vm.swappiness = 0|g" /etc/sysctl.conf || echo "vm.swappiness = 0" >> /etc/sysctl.conf
egrep -q "^(#)?net.ipv4.ip_forward.*" /etc/sysctl.conf && sed -ri "s|^(#)?net.ipv4.ip_forward.*|net.ipv4.ip_forward = 1|g" /etc/sysctl.conf || echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf
egrep -q "^(#)?net.bridge.bridge-nf-call-iptables.*" /etc/sysctl.conf && sed -ri "s|^(#)?net.bridge.bridge-nf-call-iptables.*|net.bridge.bridge-nf-call-iptables = 1|g" /etc/sysctl.conf || echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf
egrep -q "^(#)?net.bridge.bridge-nf-call-ip6tables.*" /etc/sysctl.conf && sed -ri "s|^(#)?net.bridge.bridge-nf-call-ip6tables.*|net.bridge.bridge-nf-call-ip6tables = 1|g" /etc/sysctl.conf || echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf
```
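The four one-liners above repeat the same "rewrite if present, else append" pattern. A minimal sketch that factors it into one function (the names `set_sysctl` and `apply_k8s_sysctls` are hypothetical) makes the logic easier to read and to try out on a scratch file before touching /etc/sysctl.conf:

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring the egrep/sed/echo one-liners above: if KEY appears at the
# start of a line (optionally commented out with '#'), rewrite that line to "KEY = VALUE";
# otherwise append "KEY = VALUE" to FILE.
set_sysctl() {
  local key="$1" value="$2" file="$3"
  # As in the original one-liners, the dots in KEY act as regex wildcards here.
  if grep -Eq "^(#)?${key}" "$file"; then
    sed -ri "s|^(#)?${key}.*|${key} = ${value}|g" "$file"
  else
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}

# The four settings from step 06, applied to FILE (default /etc/sysctl.conf).
apply_k8s_sysctls() {
  local file="${1:-/etc/sysctl.conf}"
  set_sysctl vm.swappiness 0 "$file"
  set_sysctl net.ipv4.ip_forward 1 "$file"
  set_sysctl net.bridge.bridge-nf-call-iptables 1 "$file"
  set_sysctl net.bridge.bridge-nf-call-ip6tables 1 "$file"
}
```

Running `apply_k8s_sysctls` twice leaves each key on exactly one line, which is the property the original one-liners are after.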

Step 07. [All hosts] Disable the system firewall.

```bash
ufw disable && systemctl disable ufw && systemctl stop ufw
```

Step 08. [master-225] (Optional) Set up password-less SSH login from master-225 to the other hosts using its public key, which makes copying files between hosts easier.

```bash
ssh-keygen -t ed25519
ssh-copy-id -p 20211 weiyigeek@10.10.107.223
ssh-copy-id -p 20211 weiyigeek@10.10.107.224
ssh-copy-id -p 20211 weiyigeek@10.10.107.226
ssh-copy-id -p 20211 weiyigeek@10.10.107.227
```

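2. Installing load-balancing tools and loading kernel modules

Step 01. Install the IPVS tools and related load-balancing dependencies.

```bash
# List the available ipvsadm versions
sudo apt-cache madison ipvsadm
sudo apt -y install ipvsadm ipset sysstat conntrack
# Keep ipvsadm from being upgraded automatically
sudo apt-mark hold ipvsadm
```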

Step 02. Configure the modules to be loaded into the kernel automatically at boot (takes effect after a reboot).

```bash
tee /etc/modules-load.d/k8s-.conf <<'EOF'
br_netfilter
overlay
nf_conntrack
ip_vs
ip_vs_lc
ip_vs_lblc
ip_vs_lblcr
ip_vs_rr
ip_vs_wrr
ip_vs_sh
ip_vs_dh
ip_vs_fo
ip_vs_nq
ip_vs_sed
ip_vs_ftp
ip_tables
ip_set
ipt_set
ipt_rpfilter
ipt_REJECT
ipip
xt_set
EOF
```

Step 03. Load the modules into the kernel manually (so no reboot is needed now).

```bash
mkdir -vp /etc/modules.d/
tee /etc/modules.d/k8s-ipvs.modules <<'EOF'
#!/bin/bash
modprobe -- br_netfilter
modprobe -- overlay
modprobe -- nf_conntrack
modprobe -- ip_vs
modprobe -- ip_vs_lc
modprobe -- ip_vs_lblc
modprobe -- ip_vs_lblcr
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- ip_vs_dh
modprobe -- ip_vs_fo
modprobe -- ip_vs_nq
modprobe -- ip_vs_sed
modprobe -- ip_vs_ftp
modprobe -- ip_tables
modprobe -- ip_set
modprobe -- ipt_set
modprobe -- ipt_rpfilter
modprobe -- ipt_REJECT
modprobe -- ipip
modprobe -- xt_set
EOF
chmod 755 /etc/modules.d/k8s-ipvs.modules && bash /etc/modules.d/k8s-ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack
# Apply the sysctl settings from step 06 (the bridge-nf-call keys need br_netfilter loaded)
sysctl --system
```
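Steps 02 and 03 list the same 22 modules twice, so the two files can drift apart when one is edited. A minimal sketch, assuming you are willing to keep the list in one shell variable (`K8S_MODULES`, `render_modules_load` and `render_modprobe_script` are hypothetical names), renders both files from a single source:

```bash
#!/usr/bin/env bash
# Hypothetical sketch: one module list, two renderings.
# Unquoted $K8S_MODULES below is intentional: word splitting yields one module per word.
K8S_MODULES="br_netfilter overlay nf_conntrack ip_vs ip_vs_lc ip_vs_lblc ip_vs_lblcr
ip_vs_rr ip_vs_wrr ip_vs_sh ip_vs_dh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp
ip_tables ip_set ipt_set ipt_rpfilter ipt_REJECT ipip xt_set"

# Content for /etc/modules-load.d/*.conf: one module name per line
render_modules_load() {
  printf '%s\n' $K8S_MODULES
}

# Content for the manual loader script: a shebang plus one "modprobe -- NAME" per module
render_modprobe_script() {
  echo '#!/bin/bash'
  printf 'modprobe -- %s\n' $K8S_MODULES
}
```

For example, `render_modules_load | sudo tee /etc/modules-load.d/k8s-.conf` and `render_modprobe_script | sudo tee /etc/modules.d/k8s-ipvs.modules` would regenerate both files from the same list.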

Tip: On kernel 4.19 and later the module is named nf_conntrack; on kernel 4.18 and earlier use nf_conntrack_ipv4 instead.
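The version rule in the tip can be checked mechanically. A small sketch, where the function name `conntrack_module` is hypothetical, picks the module name from a kernel release string such as the output of `uname -r`, using `sort -V` for the version comparison:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the conntrack module name for a kernel release string.
# Kernels >= 4.19 use nf_conntrack; older kernels use nf_conntrack_ipv4.
conntrack_module() {
  local release="${1:-$(uname -r)}"
  local version="${release%%-*}"   # "5.4.0-107-generic" -> "5.4.0"
  # If 4.19 sorts first (or is equal), the running version is >= 4.19
  if [ "$(printf '%s\n' 4.19 "$version" | sort -V | head -n1)" = "4.19" ]; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}
```

On the Ubuntu 20.04 kernel used here (5.4.0-107-generic) this selects `nf_conntrack`, matching the module lists above.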

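3. Installing and configuring HAProxy and Keepalived for high availability

Description: Because this is a test and learning environment, I did not set up two dedicated HA servers; HAProxy and Keepalived run directly on the master nodes. In production, running them on separate machines is recommended.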

Step 01. [Master nodes] Install haproxy (proxy with backend health checks) and keepalived (VRRP virtual router, master/backup).

```bash
# List the available versions
sudo apt-cache madison haproxy keepalived
sudo apt -y install haproxy keepalived
# Keep both packages from being upgraded automatically
sudo apt-mark hold haproxy keepalived
```

Step 02. [Master nodes] Configure HAProxy. Its configuration directory is /etc/haproxy/, and the configuration is identical on all nodes.

```bash
sudo cp /etc/haproxy/haproxy.cfg{,.bak}
sudo tee /etc/haproxy/haproxy.cfg <<'EOF'
global
  user haproxy
  group haproxy
  maxconn 2000
  daemon
  log /dev/log local0
  log /dev/log local1 err
  chroot /var/lib/haproxy
  stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners
  stats timeout 30s
  ca-base /etc/ssl/certs
  crt-base /etc/ssl/private
  ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384
  ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256
  ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults
  log global
  mode http
  option httplog
  option dontlognull
  timeout connect 5000
  timeout client 50000
  timeout server 50000
  timeout http-request 15s
  timeout http-keep-alive 15s

frontend k8s-master
  bind 0.0.0.0:16443
  bind 127.0.0.1:16443
  mode tcp
  option tcplog
  tcp-request inspect-delay 5s
  default_backend k8s-master

backend k8s-master
  mode tcp
  option tcplog
  option tcp-check
  balance roundrobin
  default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
  server master-223 10.10.107.223:6443 check
  server master-224 10.10.107.224:6443 check
  server master-225 10.10.107.225:6443 check
EOF
```

Step 03. [Master nodes] Configure Keepalived. Its configuration directory is /etc/keepalived/.

```bash
mkdir -vp /etc/keepalived
sudo tee /etc/keepalived/keepalived.conf <<'EOF'
! Configuration File for keepalived
global_defs {
  router_id LVS_DEVEL
  script_user root
  enable_script_security
}
vrrp_script chk_apiserver {
  script "/etc/keepalived/check_apiserver.sh"
  interval 5
  weight -5
  fall 2
  rise 1
}
vrrp_instance VI_1 {
  state __ROLE__
  interface __NETINTERFACE__
  mcast_src_ip __IP__
  virtual_router_id 51
  priority 101
  advert_int 2
  authentication {
    auth_type PASS
    auth_pass K8SHA_KA_AUTH
  }
  virtual_ipaddress {
    __VIP__
  }
}
EOF

# master-225 (MASTER)
sed -i -e 's#__ROLE__#MASTER#g' \
  -e 's#__NETINTERFACE__#ens32#g' \
  -e 's#__IP__#10.10.107.225#g' \
  -e 's#__VIP__#10.10.107.222#g' /etc/keepalived/keepalived.conf

# master-224 (BACKUP)
sed -i -e 's#__ROLE__#BACKUP#g' \
  -e 's#__NETINTERFACE__#ens32#g' \
  -e 's#__IP__#10.10.107.224#g' \
  -e 's#__VIP__#10.10.107.222#g' /etc/keepalived/keepalived.conf

# master-223 (BACKUP)
sed -i -e 's#__ROLE__#BACKUP#g' \
  -e 's#__NETINTERFACE__#ens32#g' \
  -e 's#__IP__#10.10.107.223#g' \
  -e 's#__VIP__#10.10.107.222#g' /etc/keepalived/keepalived.conf
```
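Because `sed -i` edits the file in place, running the wrong node's command (or running one twice) is hard to undo. As a minimal sketch, assuming the template above is also kept as a separate file (the name `render_keepalived` and any template file name are hypothetical), the placeholders can be filled into stdout and the role validated first:

```bash
#!/usr/bin/env bash
# Hypothetical sketch: fill the keepalived template placeholders for one node and
# print the result instead of editing the file in place.
# Usage: render_keepalived TEMPLATE ROLE INTERFACE NODE_IP VIP
render_keepalived() {
  local template="$1" role="$2" iface="$3" ip="$4" vip="$5"
  case "$role" in
    MASTER|BACKUP) ;;
    *) echo "role must be MASTER or BACKUP" >&2; return 1 ;;
  esac
  sed -e "s#__ROLE__#${role}#g" \
      -e "s#__NETINTERFACE__#${iface}#g" \
      -e "s#__IP__#${ip}#g" \
      -e "s#__VIP__#${vip}#g" "$template"
}
```

On master-224, for instance, `render_keepalived keepalived.conf.tpl BACKUP ens32 10.10.107.224 10.10.107.222 | sudo tee /etc/keepalived/keepalived.conf` would produce the same file as the in-place `sed` above while leaving the template untouched for later edits.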

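Tip: The health check is disabled in the configuration above. Enable it only after the K8S cluster has been built, by adding the following block inside vrrp_instance VI_1:

```
track_script {
  chk_apiserver
}
```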

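Step 04. [Master nodes] Create the Keepalived health-check script. If haproxy is not running for three consecutive checks, the script stops keepalived so the VIP moves to another node.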

```bash
sudo tee /etc/keepalived/check_apiserver.sh <<'EOF'
#!/bin/bash
err=0
for k in $(seq 1 3); do
  check_code=$(pgrep haproxy)
  if [[ $check_code == "" ]]; then
    err=$(expr $err + 1)
    sleep 1
    continue
  else
    err=0
    break
  fi
done

if [[ $err != "0" ]]; then
  echo "systemctl stop keepalived"
  /usr/bin/systemctl stop keepalived
  exit 1
else
  exit 0
fi
EOF
sudo chmod +x /etc/keepalived/check_apiserver.sh
```

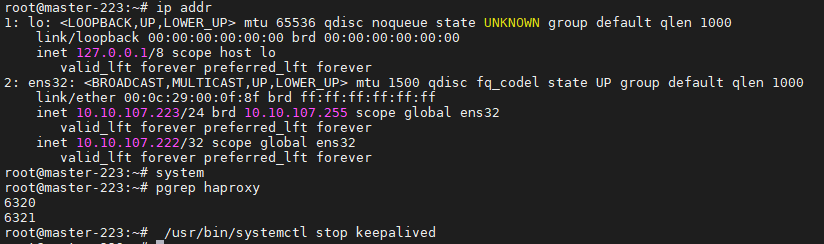

Step 05. [Master nodes] Start the haproxy and keepalived services and test VIP failover.

```bash
sudo systemctl daemon-reload
sudo systemctl enable --now haproxy && sudo systemctl enable --now keepalived

# Check which node currently holds the VIP, then ping the VIP from another node
root@master-223:~$ ip addr
root@master-224:~$ ping 10.10.107.222
```

weiyigeek.top-master-223-vip

```bash
# Simulate a failure on the node holding the VIP
root@master-223:~$ pgrep haproxy
root@master-223:~$ /usr/bin/systemctl stop keepalived
# The VIP should now have moved to another master node
root@master-225:~$ ip addr show ens32
```

That completes the HAProxy and Keepalived configuration. Next we configure the etcd cluster and issue its certificates.

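4. Deploying the etcd cluster and issuing etcd certificates

Description: Create a highly available etcd cluster. The following operations are performed on [master-225].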

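Step 01. [master-225] Create a directory for the configuration and related files, and download the cfssl tools used to create the CA and issue certificates (for cfssl, see my earlier article: https://blog.weiyigeek.top/2019/10-21-12.html#3-CFSSL-生成 ).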

```bash
mkdir -vp /app/k8s-init-work && cd /app/k8s-init-work
curl -L https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl_1.6.1_linux_amd64 -o /usr/local/bin/cfssl
curl -L https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssljson_1.6.1_linux_amd64 -o /usr/local/bin/cfssljson
curl -L https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl-certinfo_1.6.1_linux_amd64 -o /usr/local/bin/cfssl-certinfo
chmod +x /usr/local/bin/cfssl*
```

Tip:

cfssl: the CFSSL command-line tool.

cfssljson: takes the JSON output of cfssl and writes the certificate, key, certificate signing request (CSR) and bundle to files.

Step 02. Use cfssl to create the CA certificate.

```bash
# Print a default CSR template, then overwrite it with our own
cfssl print-defaults csr > ca-csr.json
tee ca-csr.json <<'EOF'
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "ChongQing",
      "ST": "ChongQing",
      "O": "k8s",
      "OU": "System"
    }
  ],
  "ca": {
    "expiry": "87600h"
  }
}
EOF
# Field notes:
# CN: Common Name. Browsers use it to check whether a site is legitimate, so it usually
#     holds a domain name; in k8s the CN is used as the user name. Very important.
# key: the algorithm used to generate the key
# hosts: the hostnames (domains) or IPs allowed to use the certificate requested by this CSR;
#        empty or "" means any (this CSR has no `"hosts": [""]` field)
# names: common subject attributes
#   * C: Country
#   * ST: State or province
#   * L: Locality name, i.e. city
#   * O: Organization name, i.e. company (in k8s it is commonly used as the Group for RBAC bindings)
#   * OU: Organization unit name, i.e. department

# Print a default signing config, then overwrite it with our own
cfssl print-defaults config > ca-config.json
tee ca-config.json <<'EOF'
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      },
      "etcd": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF
# default: the default policy; certificates are valid for 10 years (87600h)
# profiles: custom policies
#   * kubernetes: profile used to issue the Kubernetes certificates
#   * etcd: profile used to issue the etcd certificates
#   * usages: what certificates issued under the profile may be used for
#     - signing: digital signatures
#     - key encipherment: encrypting keys during the TLS handshake
#     - server auth: TLS server authentication (the certificate can be presented by a server)
#     - client auth: TLS client authentication (the certificate can be presented by a client)
#   * expiry: validity period; if omitted, the value in default applies

# Generate the self-signed CA (ca.pem, ca-key.pem, ca.csr)
cfssl gencert -initca ca-csr.json | cfssljson -bare ca
$ ls
$ openssl x509 -in ca.pem -text -noout | grep "Not"
```

Tip: Setting expiry to 87600h means the certificate expires after ten years.
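The figure in the tip is simple arithmetic: 87600 h / 24 = 3650 days. A tiny sketch (the helper names `years_to_expiry` and `expiry_to_days` are hypothetical) converts between cfssl `expiry` strings and days, assuming 365-day years, so 87600h is a couple of leap days short of ten calendar years:

```bash
#!/usr/bin/env bash
# cfssl "expiry" values are hours with an "h" suffix.
# Assumption: 365-day years, leap days ignored.
years_to_expiry() {
  echo "$(( $1 * 365 * 24 ))h"
}

expiry_to_days() {
  local hours="${1%h}"   # strip the trailing "h"
  echo $(( hours / 24 ))
}
```

For example, `years_to_expiry 10` prints `87600h` and `expiry_to_days 87600h` prints `3650`.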

Step 03. Create the etcd certificate request file and generate the etcd certificate.

```bash
tee etcd-csr.json <<'EOF'
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "10.10.107.223",
    "10.10.107.224",
    "10.10.107.225",
    "etcd1",
    "etcd2",
    "etcd3"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "ChongQing",
      "ST": "ChongQing",
      "O": "etcd",
      "OU": "System"
    }
  ]
}
EOF
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=etcd etcd-csr.json | cfssljson -bare etcd

$ ls etcd*
etcd.csr  etcd-csr.json  etcd-key.pem  etcd.pem
$ openssl x509 -in etcd.pem -text -noout | grep "X509v3 Subject Alternative Name" -A 1
```

Step 04. [All master nodes] Download and deploy etcd. First download the etcd package; the latest release can be found on the GitHub releases page (https://github.com/etcd-io/etcd/releases/).

```bash
# On master-225
wget https://github.com/etcd-io/etcd/releases/download/v3.5.4/etcd-v3.5.4-linux-amd64.tar.gz
tar -zxvf etcd-v3.5.4-linux-amd64.tar.gz
cp -a etcd-v3.5.4-linux-amd64/etcd* /usr/local/bin/
etcd --version

# Copy the package to the other master nodes
scp -P 20211 ./etcd-v3.5.4-linux-amd64.tar.gz weiyigeek@master-223:~
scp -P 20211 ./etcd-v3.5.4-linux-amd64.tar.gz weiyigeek@master-224:~

# On master-223 and master-224
tar -zxvf /home/weiyigeek/etcd-v3.5.4-linux-amd64.tar.gz -C /usr/local/
cp -a /usr/local/etcd-v3.5.4-linux-amd64/etcd* /usr/local/bin/
```

Tip: etcd official website: https://etcd.io/

Step 05. Create the configuration files required by the etcd cluster.

```bash
# On master-225: install the certificates and copy them to the other master nodes
mkdir -vp /etc/etcd/pki/
cp *.pem /etc/etcd/pki/
ls /etc/etcd/pki/
scp -P 20211 *.pem weiyigeek@master-224:~
scp -P 20211 *.pem weiyigeek@master-223:~
# On master-224 and master-223: place the copied certificates in the same directory
mkdir -vp /etc/etcd/pki/ && cp /home/weiyigeek/*.pem /etc/etcd/pki/

# master-225 (etcd1)
tee /etc/etcd/etcd.conf <<'EOF'
ETCD_NAME=etcd1
ETCD_DATA_DIR="/var/lib/etcd/data"
ETCD_LISTEN_CLIENT_URLS="https://10.10.107.225:2379,https://127.0.0.1:2379"
ETCD_LISTEN_PEER_URLS="https://10.10.107.225:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://10.10.107.225:2379"
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.10.107.225:2380"
ETCD_INITIAL_CLUSTER="etcd1=https://10.10.107.225:2380,etcd2=https://10.10.107.224:2380,etcd3=https://10.10.107.223:2380"
ETCD_INITIAL_CLUSTER_STATE=new
EOF

# master-224 (etcd2)
tee /etc/etcd/etcd.conf <<'EOF'
ETCD_NAME=etcd2
ETCD_DATA_DIR="/var/lib/etcd/data"
ETCD_LISTEN_CLIENT_URLS="https://10.10.107.224:2379,https://127.0.0.1:2379"
ETCD_LISTEN_PEER_URLS="https://10.10.107.224:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://10.10.107.224:2379"
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.10.107.224:2380"
ETCD_INITIAL_CLUSTER="etcd1=https://10.10.107.225:2380,etcd2=https://10.10.107.224:2380,etcd3=https://10.10.107.223:2380"
ETCD_INITIAL_CLUSTER_STATE=new
EOF

# master-223 (etcd3)
tee /etc/etcd/etcd.conf <<'EOF'
ETCD_NAME=etcd3
ETCD_DATA_DIR="/var/lib/etcd/data"
ETCD_LISTEN_CLIENT_URLS="https://10.10.107.223:2379,https://127.0.0.1:2379"
ETCD_LISTEN_PEER_URLS="https://10.10.107.223:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://10.10.107.223:2379"
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.10.107.223:2380"
ETCD_INITIAL_CLUSTER="etcd1=https://10.10.107.225:2380,etcd2=https://10.10.107.224:2380,etcd3=https://10.10.107.223:2380"
ETCD_INITIAL_CLUSTER_STATE=new
EOF
```
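The three etcd.conf files differ only in ETCD_NAME and the node IP, and a mismatch between a member's name/IP and its entry in ETCD_INITIAL_CLUSTER keeps the cluster from forming. A minimal sketch (`ETCD_CLUSTER` and `render_etcd_conf` are hypothetical names) renders one member's file and refuses name/IP pairs that are not listed in the initial cluster:

```bash
#!/usr/bin/env bash
# Hypothetical sketch: render /etc/etcd/etcd.conf for one member from its name and IP.
ETCD_CLUSTER="etcd1=https://10.10.107.225:2380,etcd2=https://10.10.107.224:2380,etcd3=https://10.10.107.223:2380"

render_etcd_conf() {
  local name="$1" ip="$2"
  # The member must appear in ETCD_CLUSTER with exactly this peer URL
  case ",${ETCD_CLUSTER}," in
    *",${name}=https://${ip}:2380,"*) ;;
    *) echo "member ${name}=https://${ip}:2380 not in ETCD_CLUSTER" >&2; return 1 ;;
  esac
  cat <<EOF
ETCD_NAME=${name}
ETCD_DATA_DIR="/var/lib/etcd/data"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379,https://127.0.0.1:2379"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_INITIAL_CLUSTER="${ETCD_CLUSTER}"
ETCD_INITIAL_CLUSTER_STATE=new
EOF
}
```

On master-224, for example, `render_etcd_conf etcd2 10.10.107.224 | tee /etc/etcd/etcd.conf` would write the same content as the etcd2 block above.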

Step 06. [All master nodes] Create the systemd unit file for etcd and start the service.

```bash
mkdir -vp /var/lib/etcd/
cat > /usr/lib/systemd/system/etcd.service <<'EOF'
[Unit]
Description=Etcd Server
Documentation=https://github.com/etcd-io/etcd
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
EnvironmentFile=-/etc/etcd/etcd.conf
ExecStart=/usr/local/bin/etcd \
  --client-cert-auth \
  --trusted-ca-file /etc/etcd/pki/ca.pem \
  --cert-file /etc/etcd/pki/etcd.pem \
  --key-file /etc/etcd/pki/etcd-key.pem \
  --peer-client-cert-auth \
  --peer-trusted-ca-file /etc/etcd/pki/ca.pem \
  --peer-cert-file /etc/etcd/pki/etcd.pem \
  --peer-key-file /etc/etcd/pki/etcd-key.pem
Restart=on-failure
RestartSec=5
LimitNOFILE=65535
LimitNPROC=65535

[Install]
WantedBy=multi-user.target
EOF
systemctl daemon-reload && systemctl enable --now etcd.service
```

Step 07. [All master nodes] Check that the etcd service is running on each master node and that the cluster is healthy.

```bash
systemctl status etcd.service

export ETCDCTL_API=3
# Cluster members
etcdctl --endpoints=https://10.10.107.225:2379,https://10.10.107.224:2379,https://10.10.107.223:2379 \
  --cacert="/etc/etcd/pki/ca.pem" --cert="/etc/etcd/pki/etcd.pem" --key="/etc/etcd/pki/etcd-key.pem" \
  --write-out=table member list

# Endpoint status (leader, DB size, raft term, ...)
etcdctl --endpoints=https://10.10.107.225:2379,https://10.10.107.224:2379,https://10.10.107.223:2379 \
  --cacert="/etc/etcd/pki/ca.pem" --cert="/etc/etcd/pki/etcd.pem" --key="/etc/etcd/pki/etcd-key.pem" \
  --write-out=table endpoint status

# Endpoint health
etcdctl --endpoints=https://10.10.107.225:2379,https://10.10.107.224:2379,https://10.10.107.223:2379 \
  --cacert="/etc/etcd/pki/ca.pem" --cert="/etc/etcd/pki/etcd.pem" --key="/etc/etcd/pki/etcd-key.pem" \
  --write-out=table endpoint health

# Performance check
etcdctl --endpoints=https://10.10.107.225:2379,https://10.10.107.224:2379,https://10.10.107.223:2379 \
  --cacert="/etc/etcd/pki/ca.pem" --cert="/etc/etcd/pki/etcd.pem" --key="/etc/etcd/pki/etcd-key.pem" \
  --write-out=table check perf
```
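Every check above repeats the same endpoint list and TLS flags. A minimal sketch (the names `join_endpoints`, `ETCD_ENDPOINTS` and `etcdctl_tls` are hypothetical; `ETCDCTL` is an override hook added only for this illustration) builds the endpoint list once and wraps the flags:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: build "--endpoints" from a port and IP list, and wrap etcdctl
# with the TLS flags used above. ETCDCTL can be overridden (e.g. ETCDCTL=echo) for a dry run.
join_endpoints() {
  local port="$1" out="" ip
  shift
  for ip in "$@"; do
    out="${out}${out:+,}https://${ip}:${port}"
  done
  echo "$out"
}

ETCD_ENDPOINTS=$(join_endpoints 2379 10.10.107.225 10.10.107.224 10.10.107.223)

etcdctl_tls() {
  ETCDCTL_API=3 "${ETCDCTL:-etcdctl}" --endpoints="$ETCD_ENDPOINTS" \
    --cacert=/etc/etcd/pki/ca.pem --cert=/etc/etcd/pki/etcd.pem \
    --key=/etc/etcd/pki/etcd-key.pem "$@"
}
```

With these defined, the checks shrink to `etcdctl_tls --write-out=table endpoint health`, `etcdctl_tls --write-out=table member list`, and so on.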

5. Installing the containerd runtime

Step 01. [All nodes] Install the binary release of the containerd runtime on every host. Kubernetes connects to containerd through its CRI plugin to manage the container lifecycle.

```bash
wget https://github.com/containerd/containerd/releases/download/v1.6.4/cri-containerd-cni-1.6.4-linux-amd64.tar.gz
mkdir -vp cri-containerd-cni
tar -zxvf cri-containerd-cni-1.6.4-linux-amd64.tar.gz -C cri-containerd-cni
```

Step 02. Inspect the files and configuration paths in the package, then copy them into place.

```bash
$ tree ./cri-containerd-cni/
.
├── etc
│   ├── cni
│   │   └── net.d
│   │       └── 10-containerd-net.conflist
│   ├── crictl.yaml
│   └── systemd
│       └── system
│           └── containerd.service
├── opt
│   ├── cni
│   │   └── bin
│   │       ├── bandwidth
│   │       ├── bridge
│   │       ├── dhcp
│   │       ├── firewall
│   │       ├── host-device
│   │       ├── host-local
│   │       ├── ipvlan
│   │       ├── loopback
│   │       ├── macvlan
│   │       ├── portmap
│   │       ├── ptp
│   │       ├── sbr
│   │       ├── static
│   │       ├── tuning
│   │       ├── vlan
│   │       └── vrf
│   └── containerd
│       └── cluster
│           ├── gce
│           │   ├── cloud-init
│           │   │   ├── master.yaml
│           │   │   └── node.yaml
│           │   ├── cni.template
│           │   ├── configure.sh
│           │   └── env
│           └── version
└── usr
    └── local
        ├── bin
        │   ├── containerd
        │   ├── containerd-shim
        │   ├── containerd-shim-runc-v1
        │   ├── containerd-shim-runc-v2
        │   ├── containerd-stress
        │   ├── crictl
        │   ├── critest
        │   ├── ctd-decoder
        │   └── ctr
        └── sbin
            └── runc

# Copy the files into the root filesystem
cd ./cri-containerd-cni/
cp -r etc/ /
cp -r opt/ /
cp -r usr/ /
```

Step 03. [All nodes] Generate the default containerd configuration and modify config.toml.

```bash
mkdir -vp /etc/containerd
containerd config default > /etc/containerd/config.toml
ls /etc/containerd/config.toml

# Pull the pause (sandbox) image from the Aliyun mirror instead of k8s.gcr.io
sed -i "s#k8s.gcr.io/pause#registry.cn-hangzhou.aliyuncs.com/google_containers/pause#g" /etc/containerd/config.toml
# Use the systemd cgroup driver
sed -i 's#SystemdCgroup = false#SystemdCgroup = true#g' /etc/containerd/config.toml

# Registry mirrors for docker.io, gcr.io, k8s.gcr.io and quay.io
sed -i '/registry.mirrors]/a\ \ \ \ \ \ \ \ [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]' /etc/containerd/config.toml
sed -i '/registry.mirrors."docker.io"]/a\ \ \ \ \ \ \ \ \ \ endpoint = ["https://xlx9erfu.mirror.aliyuncs.com","https://docker.mirrors.ustc.edu.cn"]' /etc/containerd/config.toml
sed -i '/registry.mirrors]/a\ \ \ \ \ \ \ \ [plugins."io.containerd.grpc.v1.cri".registry.mirrors."gcr.io"]' /etc/containerd/config.toml
sed -i '/registry.mirrors."gcr.io"]/a\ \ \ \ \ \ \ \ \ \ endpoint = ["https://gcr.mirrors.ustc.edu.cn"]' /etc/containerd/config.toml
sed -i '/registry.mirrors]/a\ \ \ \ \ \ \ \ [plugins."io.containerd.grpc.v1.cri".registry.mirrors."k8s.gcr.io"]' /etc/containerd/config.toml
sed -i '/registry.mirrors."k8s.gcr.io"]/a\ \ \ \ \ \ \ \ \ \ endpoint = ["https://gcr.mirrors.ustc.edu.cn/google-containers/","https://registry.cn-hangzhou.aliyuncs.com/google_containers/"]' /etc/containerd/config.toml
sed -i '/registry.mirrors]/a\ \ \ \ \ \ \ \ [plugins."io.containerd.grpc.v1.cri".registry.mirrors."quay.io"]' /etc/containerd/config.toml
sed -i '/registry.mirrors."quay.io"]/a\ \ \ \ \ \ \ \ \ \ endpoint = ["https://quay.mirrors.ustc.edu.cn"]' /etc/containerd/config.toml
```
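The `sed` edits above silently do nothing if the default config.toml layout differs from what they expect. A minimal post-edit check, sketched under the assumption that the edits should leave the markers below in the file (`check_containerd_config` is a hypothetical name), reports anything that did not take effect:

```bash
#!/usr/bin/env bash
# Hypothetical post-edit check for containerd's config.toml: report any of the
# edits above that did not take effect. Returns non-zero if something is missing.
check_containerd_config() {
  local cfg="${1:-/etc/containerd/config.toml}" rc=0 reg
  grep -q 'SystemdCgroup = true' "$cfg" || { echo "missing: SystemdCgroup = true" >&2; rc=1; }
  grep -q 'google_containers/pause' "$cfg" || { echo "missing: Aliyun pause image" >&2; rc=1; }
  for reg in docker.io gcr.io k8s.gcr.io quay.io; do
    grep -qF "registry.mirrors.\"${reg}\"]" "$cfg" || { echo "missing mirror: ${reg}" >&2; rc=1; }
  done
  return "$rc"
}
```

Running `check_containerd_config && systemctl restart containerd` would then only restart containerd once all edits are present.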

Step 04. Configure the runtime and image endpoints for the crictl client:

```bash
cat <<EOF > /etc/crictl.yaml
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
timeout: 10
debug: false
EOF
```

Step 05. Reload systemd, then enable and start the containerd service.

```bash
systemctl daemon-reload && systemctl enable --now containerd.service
systemctl status containerd.service
ctr version
```

Step 06. Check the version of runc, the CLI tool that creates and runs containers according to the OCI specification.

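Tip: If the bundled runc fails with `runc: symbol lookup error: runc: undefined symbol: seccomp_notify_respond`, it is because the runc shipped in the package above depends heavily on system libraries. In that case, download and install the standalone runc binary from the runc project (https://github.com/opencontainers/runc/).

```bash
wget https://github.com/opencontainers/runc/releases/download/v1.1.1/runc.amd64
chmod +x runc.amd64
mv runc.amd64 /usr/local/sbin/runc
# Check the runc version
runc --version
```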

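6. Installing and deploying the Kubernetes cluster

1) Downloading and installing the binary packages (manual; this walkthrough uses this approach)

Step 01. [master-225] Manually download the Kubernetes server package from dl.k8s.io, unpack it and install the binaries. The latest version at the time of writing is v1.23.6.

```bash
# Working directory: /app/k8s-init-work/tools
wget https://dl.k8s.io/v1.23.6/kubernetes-server-linux-amd64.tar.gz
tar -zxf kubernetes-server-linux-amd64.tar.gz
cd /app/k8s-init-work/tools/kubernetes/server/bin
cp kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubeadm kubectl /usr/local/bin
kubectl version --client
```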

Step 02. [master-225] Distribute the Kubernetes binaries to the other machines with scp.

```bash
scp -P 20211 kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubeadm kubectl weiyigeek@master-223:/tmp
scp -P 20211 kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubeadm kubectl weiyigeek@master-224:/tmp
scp -P 20211 kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubeadm kubectl weiyigeek@node-1:/tmp
scp -P 20211 kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubeadm kubectl weiyigeek@node-2:/tmp

# On each of the other hosts: install the binaries copied to /tmp
cd /tmp && cp kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubeadm kubectl /usr/local/bin/
```

Step 03. [All nodes] Create the following directories on every node of the cluster.

```bash
mkdir -vp /etc/kubernetes/{manifests,pki,ssl,cfg} /var/log/kubernetes /var/lib/kubelet
```

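2) Deploying and configuring kube-apiserver

Description: kube-apiserver is the entry point for all requests to the cluster; cluster resources are manipulated through its API.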

Step 01. [master-225] Create the apiserver CSR file and sign the certificate with the CA generated in the previous section.

```bash
tee apiserver-csr.json <<'EOF'
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "10.10.107.223",
    "10.10.107.224",
    "10.10.107.225",
    "10.10.107.222",
    "10.96.0.1",
    "weiyigeek.cluster.k8s",
    "master-223",
    "master-224",
    "master-225",
    "kubernetes",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "ChongQing",
      "ST": "ChongQing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes apiserver-csr.json | cfssljson -bare apiserver

$ ls apiserver*
apiserver.csr  apiserver-csr.json  apiserver-key.pem  apiserver.pem

cp *.pem /etc/kubernetes/ssl/
```

Step 02. [master-225] Create the token required by the TLS bootstrapping mechanism.

```bash
cat > /etc/kubernetes/bootstrap-token.csv << EOF
$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:bootstrappers"
EOF
```

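Tip: Why enable TLS bootstrapping: kubelet and kube-proxy on the nodes must present a valid CA-signed certificate to communicate with kube-apiserver. When there are many nodes, issuing these client certificates takes a lot of work and makes scaling the cluster more complex. With TLS bootstrapping, the kubelet authenticates with the bootstrap token and requests its client certificate from the apiserver automatically.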
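The token line written in step 02 has the static token file format `token,user,uid,"group1,group2,..."`. The token itself is 16 random bytes that `od -An -t x` prints as four 32-bit hex words, so stripping spaces leaves 32 hex characters. A small sketch (the function name `gen_bootstrap_token_line` is hypothetical) produces the same line and makes the format easy to verify:

```bash
#!/usr/bin/env bash
# Sketch of the bootstrap-token.csv line from step 02:
# 16 random bytes -> od prints four 8-digit hex words -> remove spaces/newline -> 32 hex chars.
gen_bootstrap_token_line() {
  local token
  token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
  # Static token file format: token,user,uid,"group1,group2,..."
  printf '%s,kubelet-bootstrap,10001,"system:bootstrappers"\n' "$token"
}
```

`gen_bootstrap_token_line > /etc/kubernetes/bootstrap-token.csv` would be equivalent to the heredoc in step 02.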

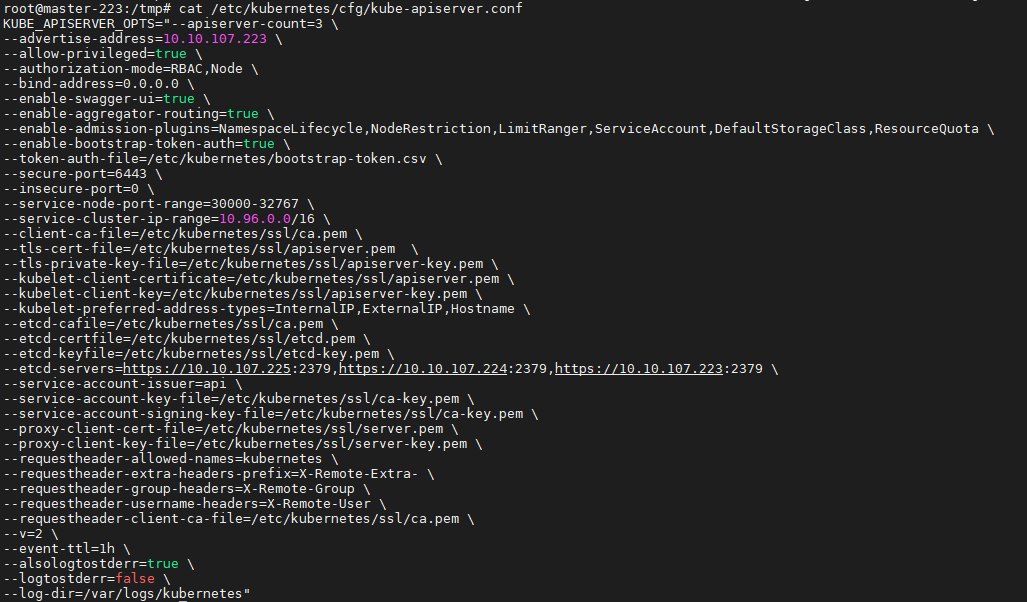

Step 03. [master-225] Create the kube-apiserver configuration file.

```bash
cat > /etc/kubernetes/cfg/kube-apiserver.conf <<'EOF'
KUBE_APISERVER_OPTS="--apiserver-count=3 \
--advertise-address=10.10.107.225 \
--allow-privileged=true \
--authorization-mode=RBAC,Node \
--bind-address=0.0.0.0 \
--enable-aggregator-routing=true \
--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
--enable-bootstrap-token-auth=true \
--token-auth-file=/etc/kubernetes/bootstrap-token.csv \
--secure-port=6443 \
--service-node-port-range=30000-32767 \
--service-cluster-ip-range=10.96.0.0/16 \
--client-ca-file=/etc/kubernetes/ssl/ca.pem \
--tls-cert-file=/etc/kubernetes/ssl/apiserver.pem \
--tls-private-key-file=/etc/kubernetes/ssl/apiserver-key.pem \
--kubelet-client-certificate=/etc/kubernetes/ssl/apiserver.pem \
--kubelet-client-key=/etc/kubernetes/ssl/apiserver-key.pem \
--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
--etcd-cafile=/etc/kubernetes/ssl/ca.pem \
--etcd-certfile=/etc/kubernetes/ssl/etcd.pem \
--etcd-keyfile=/etc/kubernetes/ssl/etcd-key.pem \
--etcd-servers=https://10.10.107.225:2379,https://10.10.107.224:2379,https://10.10.107.223:2379 \
--service-account-issuer=https://kubernetes.default.svc.cluster.local \
--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \
--service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
--proxy-client-cert-file=/etc/kubernetes/ssl/apiserver.pem \
--proxy-client-key-file=/etc/kubernetes/ssl/apiserver-key.pem \
--requestheader-allowed-names=kubernetes \
--requestheader-extra-headers-prefix=X-Remote-Extra- \
--requestheader-group-headers=X-Remote-Group \
--requestheader-username-headers=X-Remote-User \
--requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem \
--v=2 \
--event-ttl=1h \
--feature-gates=TTLAfterFinished=true \
--logtostderr=false \
--log-dir=/var/log/kubernetes"
EOF

# Deprecated flags to be aware of in v1.23:
# Flag --enable-swagger-ui has been deprecated
# Flag --insecure-port has been deprecated
# Flag --alsologtostderr has been deprecated
# Flag --logtostderr has been deprecated, will be removed in a future release
# Flag --log-dir has been deprecated, will be removed in a future release
# --feature-gates=TTLAfterFinished=true: it will be removed in a future release (still usable for now)
```

Step 04. [master-225] Create the systemd unit file for kube-apiserver.

```bash
cat > /lib/systemd/system/kube-apiserver.service << "EOF"
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=etcd.service
Wants=etcd.service

[Service]
EnvironmentFile=-/etc/kubernetes/cfg/kube-apiserver.conf
ExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure
RestartSec=5
Type=notify
LimitNOFILE=65536
LimitNPROC=65535

[Install]
WantedBy=multi-user.target
EOF
```

Step 05. Sync the files and directories created above to the other master nodes of the cluster.

```bash
$ tree /etc/kubernetes/
/etc/kubernetes/
├── bootstrap-token.csv
├── cfg
│   └── kube-apiserver.conf
├── manifests
├── pki
└── ssl
    ├── apiserver-key.pem
    ├── apiserver.pem
    ├── ca-key.pem
    ├── ca.pem
    ├── etcd-key.pem
    └── etcd.pem

# On master-225: copy the directory (scp uses -r for recursive copies) and the unit file
scp -P 20211 -r /etc/kubernetes/ weiyigeek@master-223:/tmp
scp -P 20211 -r /etc/kubernetes/ weiyigeek@master-224:/tmp
scp -P 20211 /lib/systemd/system/kube-apiserver.service weiyigeek@master-223:/tmp
scp -P 20211 /lib/systemd/system/kube-apiserver.service weiyigeek@master-224:/tmp

# On master-223 and master-224
cd /tmp/ && cp -r /tmp/kubernetes/ /etc/
mv kube-apiserver.service /lib/systemd/system/kube-apiserver.service
mv kube-apiserver kubectl kube-proxy kube-scheduler kubeadm kube-controller-manager kubelet /usr/local/bin
```

Step 06. On master-223 and master-224, change --advertise-address=10.10.107.225 in /etc/kubernetes/cfg/kube-apiserver.conf to the node's own IP address.

```bash
# On master-223
sed -i 's#--advertise-address=10.10.107.225#--advertise-address=10.10.107.223#g' /etc/kubernetes/cfg/kube-apiserver.conf
# On master-224
sed -i 's#--advertise-address=10.10.107.225#--advertise-address=10.10.107.224#g' /etc/kubernetes/cfg/kube-apiserver.conf
```

weiyigeek.top-kube-apiserver.conf

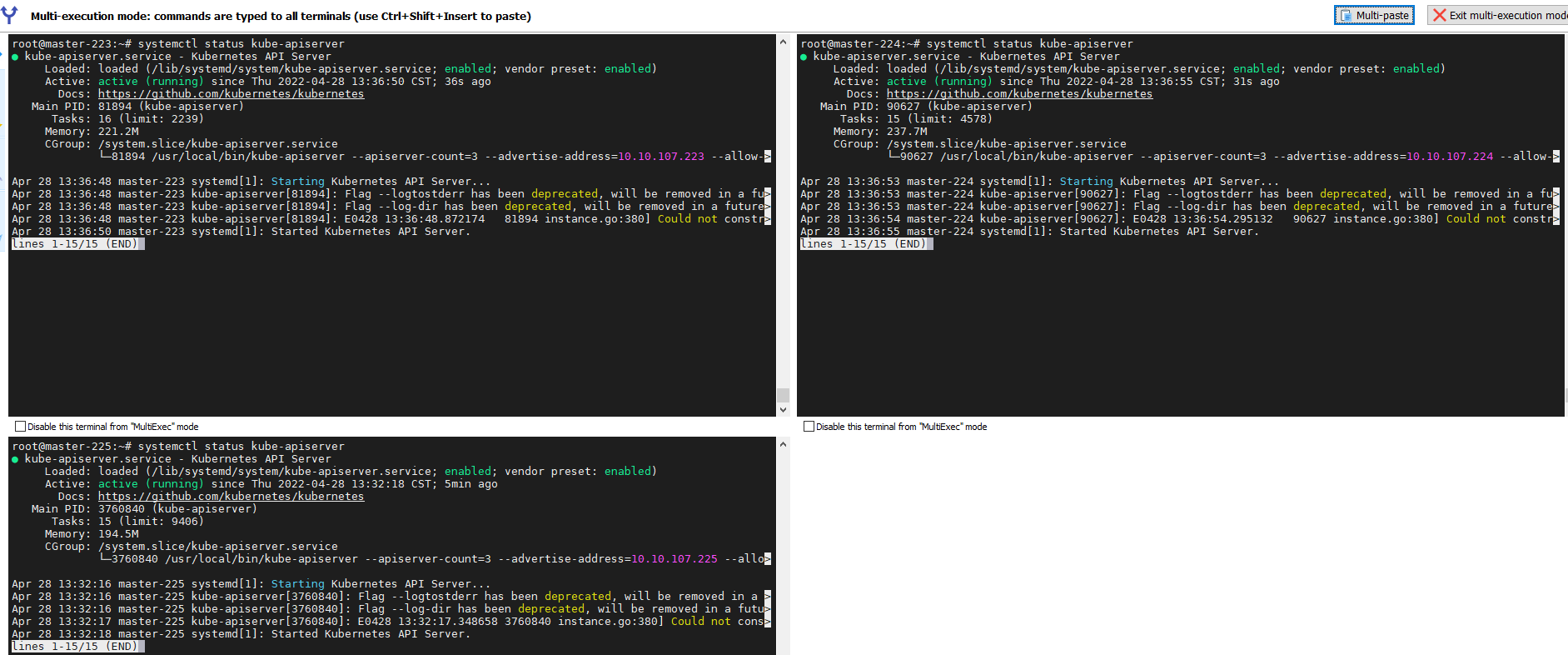

Step 07. [Master nodes] Once the steps above are done, start the apiserver service on each of the three master nodes.

```bash
systemctl daemon-reload
systemctl enable --now kube-apiserver
systemctl status kube-apiserver

# Test through the VIP (HAProxy, port 16443) and each apiserver directly (port 6443).
# An HTTP 403 for "system:anonymous" means the apiserver is up and serving TLS;
# anonymous requests are simply not authorized.
curl --insecure https://10.10.107.222:16443/
curl --insecure https://10.10.107.223:6443/
curl --insecure https://10.10.107.224:6443/
curl --insecure https://10.10.107.225:6443/
# Example response:
{
  "kind": "Status",
  "apiVersion": "v1",
  "metadata": {},
  "status": "Failure",
  "message": "forbidden: User \"system:anonymous\" cannot get path \"/\"",
  "reason": "Forbidden",
  "details": {},
  "code": 403
}
```

weiyigeek.top-apiserver service status on the three master nodes

That completes the kube-apiserver deployment!

3) Deploying and configuring kubectl

Description: kubectl is the cluster management client. It interacts with the API server to view and manage resources.

Step 01. [master-225] Create the kubectl (admin) CSR file and generate its certificate.

```bash
tee admin-csr.json <<'EOF'
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "ChongQing",
      "ST": "ChongQing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF
# O=system:masters places this user in the system:masters group, which the built-in
# cluster-admin ClusterRoleBinding grants full access to the cluster
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
ls admin*
cp admin*.pem /etc/kubernetes/ssl/
```

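Step 02. [master-225] Generate the kubeconfig file admin.conf. This is kubectl's configuration file and contains everything needed to access the apiserver, such as the apiserver address, the CA certificate and the client's own certificate.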

```bash
cd /etc/kubernetes
# Cluster: API server address (the VIP) and the embedded CA certificate
kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://10.10.107.222:16443 --kubeconfig=admin.conf
# Credentials: the admin client certificate and key
kubectl config set-credentials admin --client-certificate=/etc/kubernetes/ssl/admin.pem --client-key=/etc/kubernetes/ssl/admin-key.pem --embed-certs=true --kubeconfig=admin.conf
# Context: ties the cluster and the user together
kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=admin.conf
# Make it the default context
kubectl config use-context kubernetes --kubeconfig=admin.conf
```
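The same four `kubectl config` calls are repeated for every component kubeconfig (admin.conf here, controller-manager.conf later). A minimal sketch wraps them in one function; `make_kubeconfig`, `KUBE_APISERVER`, `KUBE_CA` and the `KUBECTL` override are hypothetical names introduced for this illustration, with defaults taken from this article:

```bash
#!/usr/bin/env bash
# Hypothetical helper wrapping the four `kubectl config` calls.
# Usage: make_kubeconfig OUTPUT CONTEXT USER CLIENT_CERT CLIENT_KEY
# KUBECTL can be overridden (e.g. KUBECTL=echo) to print the commands instead of running them.
make_kubeconfig() {
  local out="$1" context="$2" user="$3" cert="$4" key="$5"
  local server="${KUBE_APISERVER:-https://10.10.107.222:16443}"
  local ca="${KUBE_CA:-/etc/kubernetes/ssl/ca.pem}"
  local k="${KUBECTL:-kubectl}"
  "$k" config set-cluster kubernetes --certificate-authority="$ca" --embed-certs=true \
    --server="$server" --kubeconfig="$out" || return
  "$k" config set-credentials "$user" --client-certificate="$cert" --client-key="$key" \
    --embed-certs=true --kubeconfig="$out" || return
  "$k" config set-context "$context" --cluster=kubernetes --user="$user" --kubeconfig="$out" || return
  "$k" config use-context "$context" --kubeconfig="$out"
}
```

From /etc/kubernetes, `make_kubeconfig admin.conf kubernetes admin ssl/admin.pem ssl/admin-key.pem` would reproduce the commands above, and the controller-manager kubeconfig would be `make_kubeconfig controller-manager.conf system:kube-controller-manager system:kube-controller-manager ssl/controller-manager.pem ssl/controller-manager-key.pem`.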

Step 03. [master-225] Put the kubectl configuration file in place and create the role binding.

```bash
mkdir -p /root/.kube && cp /etc/kubernetes/admin.conf ~/.kube/config
# Allow the apiserver's kubelet client identity (CN "kubernetes" in apiserver.pem)
# to call the kubelet API (logs, exec, ...)
kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes --kubeconfig=/root/.kube/config

$ cat /root/.kube/config
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: base64(CA certificate)
    server: https://10.10.107.222:16443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: admin
  name: kubernetes
current-context: kubernetes
kind: Config
preferences: {}
users:
- name: admin
  user:
    client-certificate-data: base64(user certificate)
    client-key-data: base64(user certificate key)
```

Step 04. [master-225] Check the cluster status.

```bash
export KUBECONFIG=$HOME/.kube/config
kubectl cluster-info
kubectl get componentstatuses
kubectl get all --all-namespaces
kubectl get ns
```

Tip: kube-controller-manager and kube-scheduler have not been deployed yet, so both are reported as `Unhealthy` at this point.

Step 05. [master-225] Copy the kubectl configuration file to the other master nodes.

```bash
ssh -p 20211 weiyigeek@master-223 'mkdir -p ~/.kube/'
ssh -p 20211 weiyigeek@master-224 'mkdir -p ~/.kube/'
scp -P 20211 $HOME/.kube/config weiyigeek@master-223:~/.kube/
scp -P 20211 $HOME/.kube/config weiyigeek@master-224:~/.kube/

# Verify on master-223
weiyigeek@master-223:~$ kubectl get services
```

Step 06. Configure kubectl command completion (beginners may prefer to skip this until they are familiar with the commands).

```bash
apt install -y bash-completion
source /usr/share/bash-completion/bash_completion
source <(kubectl completion bash)
kubectl completion bash > ~/.kube/completion.bash.inc
source ~/.kube/completion.bash.inc
# Append (tee -a) rather than overwrite, so completion is loaded in new login shells
tee -a $HOME/.bash_profile <<'EOF'
source ~/.kube/completion.bash.inc
EOF
```

The kubectl cluster client is now configured.

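4) Deploy and configure kube-controller-manager. Description: kube-controller-manager is the cluster's controller component. It runs many controllers and acts as the control center that automatically drives objects toward their desired state.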

Step 01. [master-225] Create the kube-controller-manager CSR file and generate the certificate.

```bash
tee controller-manager-csr.json <<'EOF'
{
  "CN": "system:kube-controller-manager",
  "hosts": [
    "127.0.0.1",
    "10.10.107.223",
    "10.10.107.224",
    "10.10.107.225"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "ChongQing",
      "ST": "ChongQing",
      "O": "system:kube-controller-manager",
      "OU": "System"
    }
  ]
}
EOF
```

* `hosts` lists the IPs of all kube-controller-manager nodes (plus 127.0.0.1);
* `CN` is `system:kube-controller-manager`; the built-in ClusterRoleBinding `system:kube-controller-manager` binds this user name to the permissions kube-controller-manager needs to work;
* `O` is `system:kube-controller-manager`.

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes controller-manager-csr.json | cfssljson -bare controller-manager

$ ls controller*
controller-manager.csr  controller-manager-csr.json  controller-manager-key.pem  controller-manager.pem

cp controller-manager*.pem /etc/kubernetes/ssl
```

Step 02. Create the kubeconfig file `controller-manager.conf` for kube-controller-manager.

```bash
cd /etc/kubernetes/
kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://10.10.107.222:16443 --kubeconfig=controller-manager.conf
kubectl config set-credentials system:kube-controller-manager --client-certificate=/etc/kubernetes/ssl/controller-manager.pem --client-key=/etc/kubernetes/ssl/controller-manager-key.pem --embed-certs=true --kubeconfig=controller-manager.conf
kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=controller-manager.conf
kubectl config use-context system:kube-controller-manager --kubeconfig=controller-manager.conf
```

Step 03. Create the kube-controller-manager configuration file.

```bash
cat > /etc/kubernetes/cfg/kube-controller-manager.conf << "EOF"
KUBE_CONTROLLER_MANAGER_OPTS="--allocate-node-cidrs=true \
--bind-address=127.0.0.1 \
--secure-port=10257 \
--authentication-kubeconfig=/etc/kubernetes/controller-manager.conf \
--authorization-kubeconfig=/etc/kubernetes/controller-manager.conf \
--cluster-name=kubernetes \
--client-ca-file=/etc/kubernetes/ssl/ca.pem \
--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
--controllers=*,bootstrapsigner,tokencleaner \
--cluster-cidr=10.128.0.0/16 \
--service-cluster-ip-range=10.96.0.0/16 \
--use-service-account-credentials=true \
--root-ca-file=/etc/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \
--tls-cert-file=/etc/kubernetes/ssl/controller-manager.pem \
--tls-private-key-file=/etc/kubernetes/ssl/controller-manager-key.pem \
--leader-elect=true \
--cluster-signing-duration=87600h \
--v=2 \
--logtostderr=false \
--log-dir=/var/log/kubernetes \
--kubeconfig=/etc/kubernetes/controller-manager.conf"
EOF

# --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
```

Tip: with these options kube-controller-manager logs `Flag --logtostderr has been deprecated, will be removed in a future release` and `Flag --log-dir has been deprecated, will be removed in a future release`.
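`--cluster-cidr` (PodSubnet, 10.128.0.0/16), `--service-cluster-ip-range` (ServiceSubnet, 10.96.0.0/16) and the node subnet (10.10.107.0/24) must not overlap, and the kubelet's `clusterDNS` address 10.96.0.10 must fall inside the Service subnet. A minimal pure-shell sketch for checking this before starting the services, assuming plain dotted-quad IPv4 input without leading zeros (a leading zero would be read as octal by shell arithmetic). The function names are hypothetical:

```bash
#!/usr/bin/env bash
# ip2int A.B.C.D -> 32-bit integer (IPv4 only, no leading zeros in octets)
ip2int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# ip_in_cidr IP NET/BITS -> exit status 0 if IP lies inside the CIDR block
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}" mask
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip2int "$ip") & mask )) -eq $(( $(ip2int "$net") & mask )) ]
}

# cidrs_overlap A/x B/y -> 0 if the two (aligned) CIDR blocks share any address;
# for CIDR blocks this happens exactly when one contains the other's network address
cidrs_overlap() {
  ip_in_cidr "${1%/*}" "$2" || ip_in_cidr "${2%/*}" "$1"
}

# Example checks against the network plan of this article:
# ip_in_cidr 10.96.0.10 10.96.0.0/16 && echo "clusterDNS is inside ServiceSubnet"
# cidrs_overlap 10.128.0.0/16 10.96.0.0/16 && echo "PodSubnet overlaps ServiceSubnet!"
```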

Step 04. Create the kube-controller-manager systemd unit file.

```bash
cat > /lib/systemd/system/kube-controller-manager.service << "EOF"
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/cfg/kube-controller-manager.conf
ExecStart=/usr/local/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

Step 05. [master-225] Likewise, distribute the files above to the other master nodes.

```bash
# On master-225: copy the files to /tmp on the other masters
scp -P 20211 /etc/kubernetes/ssl/controller-manager.pem /etc/kubernetes/ssl/controller-manager-key.pem /etc/kubernetes/controller-manager.conf /etc/kubernetes/cfg/kube-controller-manager.conf /lib/systemd/system/kube-controller-manager.service weiyigeek@master-223:/tmp
scp -P 20211 /etc/kubernetes/ssl/controller-manager.pem /etc/kubernetes/ssl/controller-manager-key.pem /etc/kubernetes/controller-manager.conf /etc/kubernetes/cfg/kube-controller-manager.conf /lib/systemd/system/kube-controller-manager.service weiyigeek@master-224:/tmp

# On master-223 and master-224 (as root): move the files into place
cd /tmp
mv controller-manager*.pem /etc/kubernetes/ssl/
mv controller-manager.conf /etc/kubernetes/controller-manager.conf
mv kube-controller-manager.conf /etc/kubernetes/cfg/kube-controller-manager.conf
mv kube-controller-manager.service /lib/systemd/system/kube-controller-manager.service
```
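This step (and the matching ones for kube-scheduler, kubelet and kube-proxy) copies one set of files to several hosts with one `scp` per host. A sketch of a helper that loops over the hosts, assuming the SSH port 20211 and user `weiyigeek` used throughout; the name `distribute` and the `RUN`, `SSH_PORT` and `SSH_USER` variables are hypothetical. Setting `RUN=echo` prints the commands instead of running them:

```bash
#!/usr/bin/env bash
# distribute HOST [HOST ...] -- FILE [FILE ...]
# Hypothetical helper: copies FILEs to /tmp on every HOST via scp.
# RUN=echo gives a dry run; SSH_PORT / SSH_USER override the defaults.
distribute() {
  hosts=""
  while [ "$#" -gt 0 ] && [ "$1" != "--" ]; do
    hosts="$hosts $1"
    shift
  done
  if [ "$#" -eq 0 ]; then
    echo "usage: distribute HOST... -- FILE..." >&2
    return 2
  fi
  shift   # drop the "--" separator
  for h in $hosts; do
    ${RUN:-} scp -P "${SSH_PORT:-20211}" "$@" "${SSH_USER:-weiyigeek}@${h}:/tmp" || return 1
  done
}
```

For example, `RUN=echo distribute master-223 master-224 -- /etc/kubernetes/controller-manager.conf` shows the two `scp` commands it would run; the `mv` commands on the target nodes stay as shown above.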

Step 06. Reload systemd, then enable and start the kube-controller-manager service.

```bash
systemctl daemon-reload
systemctl enable --now kube-controller-manager
systemctl status kube-controller-manager
```

Step 07. With kube-controller-manager running, check the component status again: controller-manager, previously `Unhealthy`, now reports `Healthy`.

```bash
kubectl get componentstatuses
```

kube-controller-manager is now installed and configured.

5) Deploy and configure kube-scheduler. Description: kube-scheduler is the cluster's scheduler component; it selects a suitable node for each Pod to run on.

Step 01. [master-225] Create the kube-scheduler CSR file and generate the certificate.

```bash
tee scheduler-csr.json <<'EOF'
{
  "CN": "system:kube-scheduler",
  "hosts": [
    "127.0.0.1",
    "10.10.107.223",
    "10.10.107.224",
    "10.10.107.225"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "ChongQing",
      "ST": "ChongQing",
      "O": "system:kube-scheduler",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes scheduler-csr.json | cfssljson -bare scheduler

$ ls scheduler*
scheduler-csr.json  scheduler.csr  scheduler-key.pem  scheduler.pem

cp scheduler*.pem /etc/kubernetes/ssl
```

Step 02. Next, create the kubeconfig file for kube-scheduler.

```bash
cd /etc/kubernetes/
kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://10.10.107.222:16443 --kubeconfig=scheduler.conf
kubectl config set-credentials system:kube-scheduler --client-certificate=/etc/kubernetes/ssl/scheduler.pem --client-key=/etc/kubernetes/ssl/scheduler-key.pem --embed-certs=true --kubeconfig=scheduler.conf
kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=scheduler.conf
kubectl config use-context system:kube-scheduler --kubeconfig=scheduler.conf
```

Step 03. Create the kube-scheduler service configuration file.

```bash
# --bind-address sets the listen address of the secure port (10259)
cat > /etc/kubernetes/cfg/kube-scheduler.conf << "EOF"
KUBE_SCHEDULER_OPTS="--bind-address=127.0.0.1 \
--secure-port=10259 \
--kubeconfig=/etc/kubernetes/scheduler.conf \
--authentication-kubeconfig=/etc/kubernetes/scheduler.conf \
--authorization-kubeconfig=/etc/kubernetes/scheduler.conf \
--client-ca-file=/etc/kubernetes/ssl/ca.pem \
--tls-cert-file=/etc/kubernetes/ssl/scheduler.pem \
--tls-private-key-file=/etc/kubernetes/ssl/scheduler-key.pem \
--leader-elect=true \
--alsologtostderr=true \
--logtostderr=false \
--log-dir=/var/log/kubernetes \
--v=2"
EOF
```

Step 04. Create the kube-scheduler systemd unit file.

```bash
cat > /lib/systemd/system/kube-scheduler.service << "EOF"
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/cfg/kube-scheduler.conf
ExecStart=/usr/local/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

Step 05. [master-225] Likewise, distribute the files above to the other master nodes.

```bash
# On master-225: copy the files to /tmp on the other masters
scp -P 20211 /etc/kubernetes/ssl/scheduler.pem /etc/kubernetes/ssl/scheduler-key.pem /etc/kubernetes/scheduler.conf /etc/kubernetes/cfg/kube-scheduler.conf /lib/systemd/system/kube-scheduler.service weiyigeek@master-223:/tmp
scp -P 20211 /etc/kubernetes/ssl/scheduler.pem /etc/kubernetes/ssl/scheduler-key.pem /etc/kubernetes/scheduler.conf /etc/kubernetes/cfg/kube-scheduler.conf /lib/systemd/system/kube-scheduler.service weiyigeek@master-224:/tmp

# On master-223 and master-224 (as root): move the files into place
cd /tmp
mv scheduler*.pem /etc/kubernetes/ssl/
mv scheduler.conf /etc/kubernetes/scheduler.conf
mv kube-scheduler.conf /etc/kubernetes/cfg/kube-scheduler.conf
mv kube-scheduler.service /lib/systemd/system/kube-scheduler.service
```

Step 06. [all master nodes] Reload systemd, then enable and start the kube-scheduler service.

```bash
systemctl daemon-reload
systemctl enable --now kube-scheduler
systemctl status kube-scheduler
```

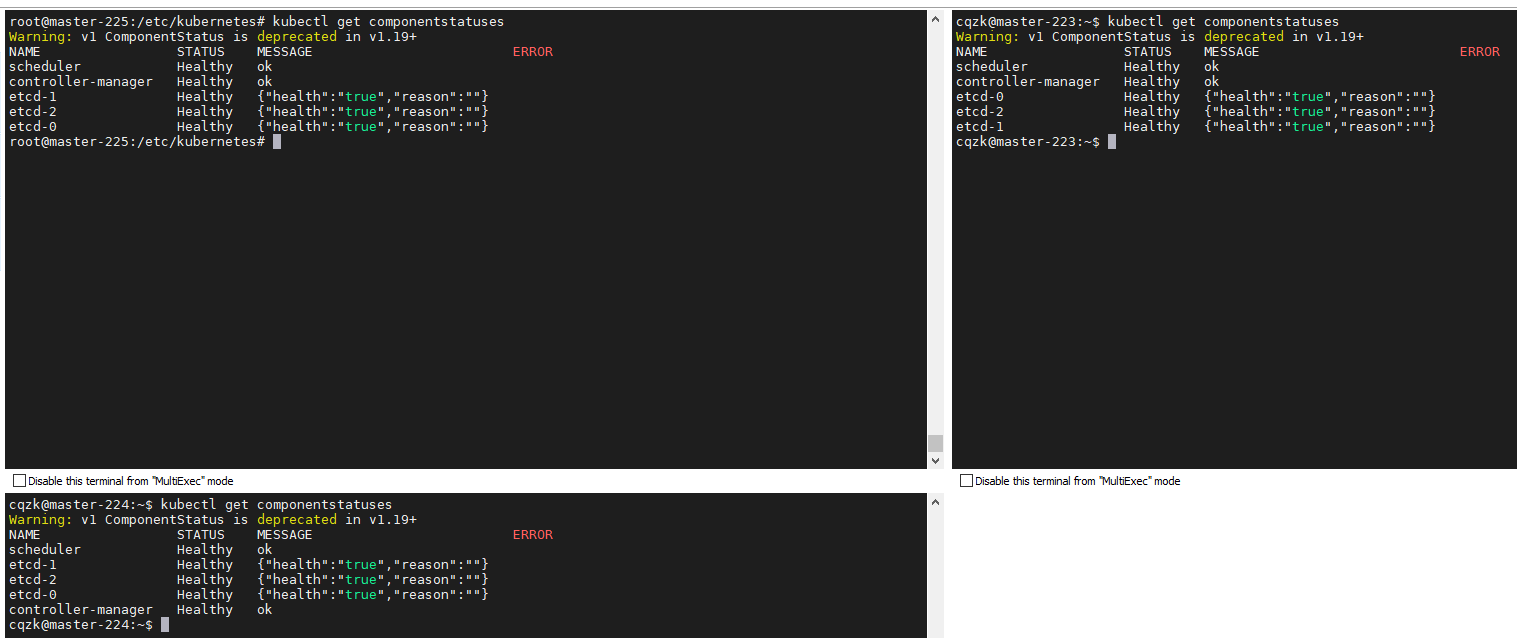

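Step 07. [all master nodes] Verify the status of every component on all master nodes. The healthy output is shown below; if there are errors, resolve them before continuing.

```bash
kubectl get componentstatuses
```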

weiyigeek.top - component status on all master nodes
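`kubectl get componentstatuses` has been deprecated since Kubernetes v1.19. As an alternative sketch, you can probe the `/healthz` endpoints on the secure ports configured above (10257 for kube-controller-manager, 10259 for kube-scheduler) locally on each master; `/healthz` is included by default in these components' `--authorization-always-allow-paths`. The helper name is hypothetical:

```bash
#!/usr/bin/env bash
# check_components: probe the local /healthz endpoints of kube-controller-manager
# (secure port 10257) and kube-scheduler (secure port 10259) on this master.
# Hypothetical helper; run it on each master node.
check_components() {
  rc=0
  for pair in kube-controller-manager:10257 kube-scheduler:10259; do
    name=${pair%%:*}
    port=${pair##*:}
    # -k skips verification of the serving certificate (signed by our own CA)
    body=$(curl -sk --max-time 3 "https://127.0.0.1:${port}/healthz") || body=""
    if [ "$body" = "ok" ]; then
      echo "${name}: ok"
    else
      echo "${name}: unhealthy (${body:-no response})"
      rc=1
    fi
  done
  return "$rc"
}
```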

6) Deploy and configure kubelet

Step 01. [master-225] Read `BOOTSTRAP_TOKEN` and create the kubelet bootstrap kubeconfig file `kubelet-bootstrap.kubeconfig`. (The `kubelet.conf` referenced later by `--kubeconfig` is written by kubelet itself once its bootstrap certificate has been issued.)

```bash
cd /etc/kubernetes/
# The first comma-separated field of bootstrap-token.csv is the token
BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/bootstrap-token.csv)
kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://weiyigeek.cluster.k8s:16443 --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig
kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig
kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig
kubectl config use-context default --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig
```
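`awk -F ","` takes the first field of `/etc/kubernetes/bootstrap-token.csv`, which in the kube-apiserver static token file format `token,user,uid,"group1,group2"` is the token itself. For reference, a sketch that generates such a line. The uid `10001` and the group `system:bootstrappers` are illustrative assumptions, so reuse whatever the file created earlier in this series actually contains:

```bash
#!/usr/bin/env bash
# new_bootstrap_token_line: print one line in the static token file format
#   token,user,uid,"group"
# The token is 16 random bytes rendered as 32 lowercase hex characters.
# uid 10001 and group "system:bootstrappers" are illustrative assumptions.
new_bootstrap_token_line() {
  token=$(od -An -tx1 -N16 /dev/urandom | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:bootstrappers"\n' "$token"
}

# Reading the token back works exactly as in the step above:
#   BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/bootstrap-token.csv)
```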

Step 02. Next, create the cluster role bindings.

```bash
# Grants cluster-admin (full cluster access) to the bootstrap user kubelet-bootstrap
kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=kubelet-bootstrap
# Allow kubelet-bootstrap to create node bootstrap CSRs
kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig
# Allow the group system:bootstrappers to create CSRs
kubectl create clusterrolebinding create-csrs-for-bootstrapping --clusterrole=system:node-bootstrapper --group=system:bootstrappers
# Auto-approve the initial client-certificate CSRs of the group system:bootstrappers
kubectl create clusterrolebinding auto-approve-csrs-for-group --clusterrole=system:certificates.k8s.io:certificatesigningrequests:nodeclient --group=system:bootstrappers
# Auto-approve client-certificate renewals of nodes that have already joined (group system:nodes)
kubectl create clusterrolebinding auto-approve-renewals-for-nodes --clusterrole=system:certificates.k8s.io:certificatesigningrequests:selfnodeclient --group=system:nodes
```
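After running these commands you can confirm that the four bootstrap-related bindings exist. A sketch (the function name is hypothetical) that relies on `kubectl get -o name` printing one `clusterrolebinding.rbac.authorization.k8s.io/<name>` line per object:

```bash
#!/usr/bin/env bash
# check_bootstrap_bindings: report which of the ClusterRoleBindings created in
# the step above exist; returns non-zero if any is missing.
check_bootstrap_bindings() {
  have=$(kubectl get clusterrolebinding -o name) || return 1
  missing=0
  for b in kubelet-bootstrap create-csrs-for-bootstrapping \
           auto-approve-csrs-for-group auto-approve-renewals-for-nodes; do
    if printf '%s\n' "$have" | grep -qxF "clusterrolebinding.rbac.authorization.k8s.io/$b"; then
      echo "ok      $b"
    else
      echo "MISSING $b"
      missing=1
    fi
  done
  return "$missing"
}
```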

Step 03. Create the kubelet configuration file; see the reference (https://kubernetes.io/docs/reference/config-api/kubelet-config.v1beta1/).

```bash
cat > /etc/kubernetes/cfg/kubelet-config.yaml <<'EOF'
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/ssl/ca.pem
authorization:
  # AlwaysAllow: every authenticated request is authorized; Webhook would delegate the decision to the apiserver
  mode: AlwaysAllow
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
containerLogMaxFiles: 5
containerLogMaxSize: 10Mi
contentType: application/vnd.kubernetes.protobuf
cpuCFSQuota: true
cpuManagerPolicy: none
cpuManagerReconcilePeriod: 10s
enableControllerAttachDetach: true
enableDebuggingHandlers: true
enforceNodeAllocatable:
- pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 20s
hairpinMode: promiscuous-bridge
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 20s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
iptablesDropBit: 15
iptablesMasqueradeBit: 14
kubeAPIBurst: 10
kubeAPIQPS: 5
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
volumeStatsAggPeriod: 1m0s
serverTLSBootstrap: true
EOF

# Equivalent JSON form (replace __IP__ with the node IP)
cat > /etc/kubernetes/cfg/kubelet.json << "EOF"
{
  "kind": "KubeletConfiguration",
  "apiVersion": "kubelet.config.k8s.io/v1beta1",
  "authentication": {
    "x509": {
      "clientCAFile": "/etc/kubernetes/ssl/ca.pem"
    },
    "webhook": {
      "enabled": true,
      "cacheTTL": "2m0s"
    },
    "anonymous": {
      "enabled": false
    }
  },
  "authorization": {
    "mode": "Webhook",
    "webhook": {
      "cacheAuthorizedTTL": "5m0s",
      "cacheUnauthorizedTTL": "30s"
    }
  },
  "address": "__IP__",
  "port": 10250,
  "readOnlyPort": 10255,
  "cgroupDriver": "systemd",
  "hairpinMode": "promiscuous-bridge",
  "serializeImagePulls": false,
  "clusterDomain": "cluster.local.",
  "clusterDNS": ["10.96.0.10"]
}
EOF
```

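Tip: in `kubelet.json` the `address` field must be changed to the IP of the current host, e.g. `10.10.107.225` on master-225. In `kubelet-config.yaml` (the file the kubelet service below loads via `--config`) I set it to `0.0.0.0`, which listens on all interfaces; if you need to bind to a specific interface, set that interface's IP instead.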
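If you use the `kubelet.json` variant, the `__IP__` placeholder has to be replaced on every node. A minimal `sed` sketch; the function name is hypothetical, and the node IP is passed explicitly rather than detected:

```bash
#!/usr/bin/env bash
# render_kubelet_json TEMPLATE NODE_IP
# Prints TEMPLATE with the quoted "__IP__" placeholder replaced by NODE_IP.
render_kubelet_json() {
  sed "s#\"__IP__\"#\"$2\"#g" "$1"
}

# e.g. on master-225:
#   render_kubelet_json /etc/kubernetes/cfg/kubelet.json 10.10.107.225 > /tmp/kubelet.json \
#     && mv /tmp/kubelet.json /etc/kubernetes/cfg/kubelet.json
```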

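Tip: `serverTLSBootstrap: true` makes kubelet obtain its serving certificate through TLS bootstrapping (a CSR), so that metrics-server, installed later, does not report certificate errors.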

Step 04. [master-225] Create the kubelet systemd unit file.

```bash
mkdir -vp /var/lib/kubelet
cat > /lib/systemd/system/kubelet.service << "EOF"
[Unit]
Description=kubelet: The Kubernetes Node Agent
Documentation=https://kubernetes.io/docs/home/
Wants=network-online.target
After=network-online.target

[Service]
WorkingDirectory=/var/lib/kubelet
# --root-dir is kubelet's own data directory (not the CNI config directory)
ExecStart=/usr/local/bin/kubelet \
  --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \
  --config=/etc/kubernetes/cfg/kubelet-config.yaml \
  --cert-dir=/etc/kubernetes/ssl \
  --kubeconfig=/etc/kubernetes/kubelet.conf \
  --network-plugin=cni \
  --root-dir=/var/lib/kubelet \
  --cni-bin-dir=/opt/cni/bin \
  --cni-conf-dir=/etc/cni/net.d \
  --container-runtime=remote \
  --container-runtime-endpoint=unix:///run/containerd/containerd.sock \
  --rotate-certificates \
  --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6 \
  --image-pull-progress-deadline=15m \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=2 \
  --node-labels=node.kubernetes.io/node=''
# RestartSec only takes effect together with a Restart= policy
Restart=always
StartLimitInterval=0
RestartSec=10
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```
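`cgroupDriver: systemd` in `kubelet-config.yaml` only works if containerd's runc runtime also uses the systemd cgroup driver (`SystemdCgroup = true` in `/etc/containerd/config.toml`); a mismatch between the two is a common cause of Pods failing to start. A rough consistency-check sketch that greps both files. It assumes each option appears at most once and is not commented out; the paths are the ones used in this series and can be overridden, and the function name is hypothetical:

```bash
#!/usr/bin/env bash
# cgroup_driver_matches [KUBELET_YAML] [CONTAINERD_TOML]
# Prints both drivers and returns 0 when kubelet and containerd (runc) agree.
# Unset values fall back to the defaults: cgroupfs for both components.
cgroup_driver_matches() {
  kcfg="${1:-/etc/kubernetes/cfg/kubelet-config.yaml}"
  ccfg="${2:-/etc/containerd/config.toml}"
  kdrv=$(awk '$1 == "cgroupDriver:" {print $2}' "$kcfg")
  if grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$ccfg"; then
    cdrv=systemd
  else
    cdrv=cgroupfs
  fi
  echo "kubelet=${kdrv:-cgroupfs} containerd=${cdrv}"
  [ "${kdrv:-cgroupfs}" = "$cdrv" ]
}
```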

Step 05. Copy the kubelet files from master-225 to all other nodes.

```bash
# On master-225
scp -P 20211 /etc/kubernetes/kubelet-bootstrap.kubeconfig /etc/kubernetes/cfg/kubelet-config.yaml /lib/systemd/system/kubelet.service weiyigeek@master-223:/tmp
scp -P 20211 /etc/kubernetes/kubelet-bootstrap.kubeconfig /etc/kubernetes/cfg/kubelet-config.yaml /lib/systemd/system/kubelet.service weiyigeek@master-224:/tmp
scp -P 20211 /etc/kubernetes/kubelet-bootstrap.kubeconfig /etc/kubernetes/cfg/kubelet-config.yaml /lib/systemd/system/kubelet.service weiyigeek@node-1:/tmp
scp -P 20211 /etc/kubernetes/kubelet-bootstrap.kubeconfig /etc/kubernetes/cfg/kubelet-config.yaml /lib/systemd/system/kubelet.service weiyigeek@node-2:/tmp

# On every other node
sudo mkdir -vp /var/lib/kubelet /etc/kubernetes/cfg/
cp /tmp/kubelet-bootstrap.kubeconfig /etc/kubernetes/kubelet-bootstrap.kubeconfig
cp /tmp/kubelet-config.yaml /etc/kubernetes/cfg/kubelet-config.yaml
cp /tmp/kubelet.service /lib/systemd/system/kubelet.service
```

Step 06. [all nodes] Reload systemd, then enable and start the kubelet service.

```bash
systemctl daemon-reload
systemctl enable --now kubelet.service
systemctl status -l kubelet.service
```

Step 07. Use kubectl to list all nodes in the cluster.

```
$ kubectl get node -o wide
NAME         STATUS     ROLES    AGE     VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
master-223   NotReady   <none>   17h     v1.23.6   10.10.107.223   <none>        Ubuntu 20.04.3 LTS   5.4.0-92-generic    containerd://1.6.4
master-224   NotReady   <none>   19s     v1.23.6   10.10.107.224   <none>        Ubuntu 20.04.3 LTS   5.4.0-92-generic    containerd://1.6.4
master-225   NotReady   <none>   3d22h   v1.23.6   10.10.107.225   <none>        Ubuntu 20.04.3 LTS   5.4.0-109-generic   containerd://1.6.4
node-1       NotReady   <none>   17h     v1.23.6   10.10.107.226   <none>        Ubuntu 20.04.2 LTS   5.4.0-96-generic    containerd://1.6.4
node-2       NotReady   <none>   17h     v1.23.6   10.10.107.227   <none>        Ubuntu 20.04.2 LTS   5.4.0-96-generic    containerd://1.6.4
```

Tip: every node above is `NotReady` because no network plugin is installed yet, so Pods on different nodes cannot communicate. Once kube-proxy and Calico are installed, the nodes become `Ready`.

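Step 08. Once kubelet has started successfully, run the following command to see the certificates requested through kubelet-bootstrap. If CONDITION is not `Approved,Issued`, troubleshoot before continuing.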

```
$ kubectl get csr
NAME        AGE     SIGNERNAME                                    REQUESTOR           CONDITION
csr-b949p   7m55s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued
csr-c9hs4   3m34s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued
csr-r8vhp   5m50s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued
csr-zb4sr   3m40s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued
```
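Because `serverTLSBootstrap: true` is set, each kubelet also submits a CSR for its serving certificate (signer `kubernetes.io/kubelet-serving`, requestor `system:node:<name>`). kube-controller-manager does not auto-approve these serving CSRs, so they remain `Pending` until approved with `kubectl certificate approve <name>` (review them before approving in production). A sketch that approves only pending serving CSRs. The function name is hypothetical, and it assumes SIGNERNAME is the third column and CONDITION the last one in `kubectl get csr` output, as in the listing above:

```bash
#!/usr/bin/env bash
# approve_pending_serving_csrs: approve every Pending CSR whose signer is
# kubernetes.io/kubelet-serving; client CSRs are left untouched.
approve_pending_serving_csrs() {
  for csr in $(kubectl get csr --no-headers |
               awk '$3 == "kubernetes.io/kubelet-serving" && $NF == "Pending" {print $1}'); do
    kubectl certificate approve "$csr"
  done
}
```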

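7) Deploy and configure kube-proxy. Description: kube-proxy maintains the network rules on each node that allow Pods to be reached correctly from inside and outside the cluster. It is also central to Service load balancing, forwarding traffic to the backend Pods.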

Step 01. [master-225] Create the kube-proxy CSR file and generate the certificate.

```bash
tee proxy-csr.json <<'EOF'
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "ChongQing",
      "ST": "ChongQing",
      "O": "system:kube-proxy",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes proxy-csr.json | cfssljson -bare proxy

$ ls proxy*
proxy.csr  proxy-csr.json  proxy-key.pem  proxy.pem

cp proxy*.pem /etc/kubernetes/ssl
```

Step 02. Next, create the kubeconfig file for kube-proxy.

```bash
cd /etc/kubernetes/
kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://10.10.107.222:16443 --kubeconfig=kube-proxy.kubeconfig
kubectl config set-credentials kube-proxy --client-certificate=/etc/kubernetes/ssl/proxy.pem --client-key=/etc/kubernetes/ssl/proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig
kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig
kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
```

Step 03. Create the kube-proxy configuration file.

```bash
# __HOSTNAME__ is replaced with each node's hostname in step 05
cat > /etc/kubernetes/cfg/kube-proxy.yaml << "EOF"
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
bindAddress: 0.0.0.0
healthzBindAddress: 0.0.0.0:10256
metricsBindAddress: 0.0.0.0:10249
hostnameOverride: __HOSTNAME__
clusterCIDR: 10.128.0.0/16
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
mode: ipvs
ipvs:
  excludeCIDRs:
  - 10.128.0.1/32
EOF
```

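Tip: `mode` (ProxyMode) selects the proxy mode kube-proxy uses.

On Linux three modes are currently available: `userspace` (older, about to be deprecated), `iptables` (newer and faster), and `ipvs` (newest, with the best performance and scalability).

On Windows two modes are available: `userspace` (older but stable) and `kernelspace` (newer and faster).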
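`mode: ipvs` needs the IPVS kernel modules loaded on every node: `ip_vs`, `ip_vs_rr`, `ip_vs_wrr`, `ip_vs_sh` and `nf_conntrack` (`nf_conntrack_ipv4` on kernels older than 4.19; Ubuntu 20.04 ships 5.4). A sketch that reads `lsmod` output and prints the modules that are still missing; the function name is hypothetical:

```bash
#!/usr/bin/env bash
# missing_ipvs_modules: read `lsmod` output on stdin and print every module
# required by kube-proxy's IPVS mode that is not loaded.
missing_ipvs_modules() {
  loaded=$(awk 'NR > 1 {print $1}')   # skip the "Module Size Used by" header
  for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    printf '%s\n' "$loaded" | grep -qx "$m" || echo "$m"
  done
}

# Usage on a node (as root), loading whatever is missing:
#   lsmod | missing_ipvs_modules | xargs -r -n1 modprobe
```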

Step 04. Create the `kube-proxy.service` systemd unit file.

```bash
mkdir -vp /var/lib/kube-proxy
cat > /lib/systemd/system/kube-proxy.service << "EOF"
[Unit]
Description=Kubernetes Kube-proxy Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \
  --config=/etc/kubernetes/cfg/kube-proxy.yaml \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=2
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

Step 05. Copy the following files from master-225 to the corresponding directories on the other nodes.

```bash
# On master-225
scp -P 20211 /etc/kubernetes/kube-proxy.kubeconfig /etc/kubernetes/ssl/proxy.pem /etc/kubernetes/ssl/proxy-key.pem /etc/kubernetes/cfg/kube-proxy.yaml /lib/systemd/system/kube-proxy.service weiyigeek@master-223:/tmp
scp -P 20211 /etc/kubernetes/kube-proxy.kubeconfig /etc/kubernetes/ssl/proxy.pem /etc/kubernetes/ssl/proxy-key.pem /etc/kubernetes/cfg/kube-proxy.yaml /lib/systemd/system/kube-proxy.service weiyigeek@master-224:/tmp
scp -P 20211 /etc/kubernetes/kube-proxy.kubeconfig /etc/kubernetes/ssl/proxy.pem /etc/kubernetes/ssl/proxy-key.pem /etc/kubernetes/cfg/kube-proxy.yaml /lib/systemd/system/kube-proxy.service weiyigeek@node-1:/tmp
scp -P 20211 /etc/kubernetes/kube-proxy.kubeconfig /etc/kubernetes/ssl/proxy.pem /etc/kubernetes/ssl/proxy-key.pem /etc/kubernetes/cfg/kube-proxy.yaml /lib/systemd/system/kube-proxy.service weiyigeek@node-2:/tmp

# On every other node (as root)
cd /tmp
cp kube-proxy.kubeconfig /etc/kubernetes/kube-proxy.kubeconfig
cp proxy*.pem /etc/kubernetes/ssl/
cp kube-proxy.yaml /etc/kubernetes/cfg/kube-proxy.yaml
cp kube-proxy.service /lib/systemd/system/kube-proxy.service
mkdir -vp /var/lib/kube-proxy

# On every node, master-225 included: fill in this node's hostname
sed -i "s#__HOSTNAME__#$(hostname)#g" /etc/kubernetes/cfg/kube-proxy.yaml
```

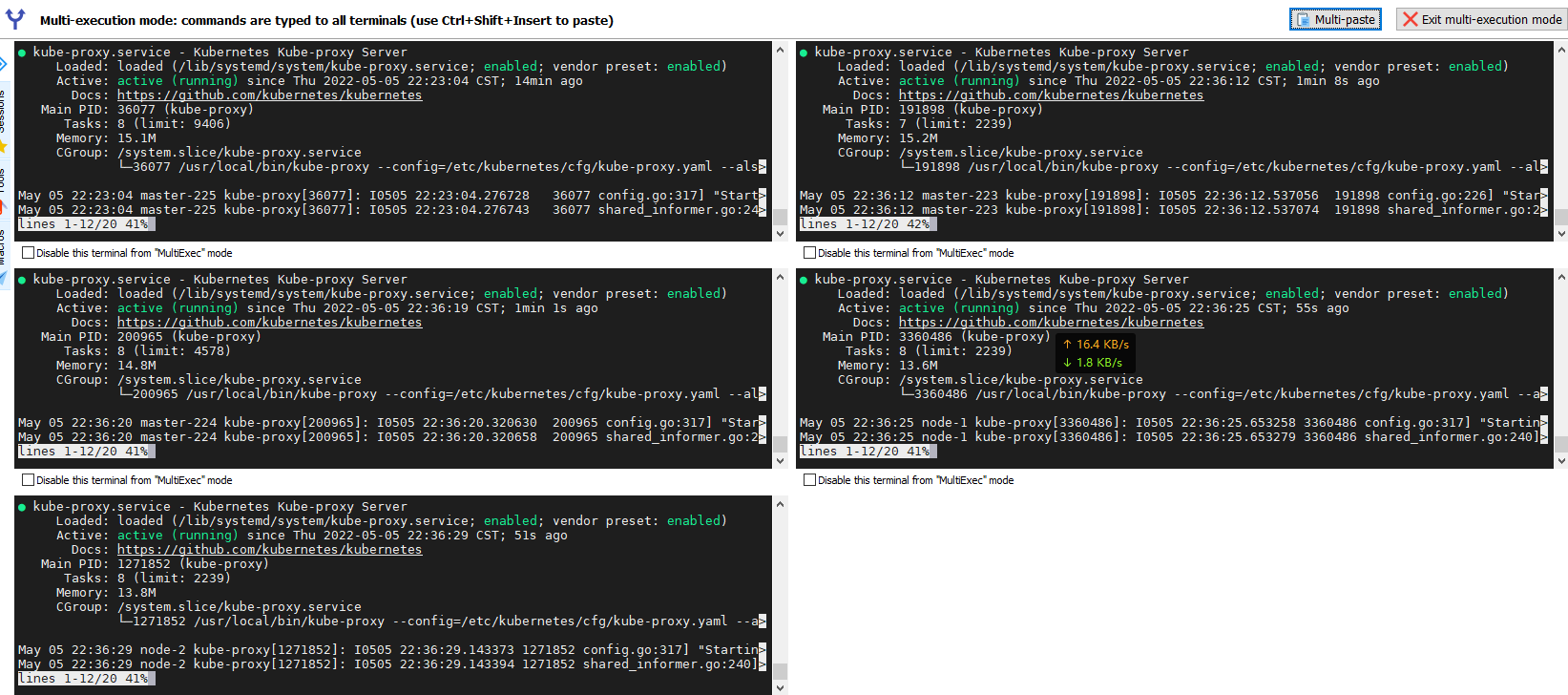

Step 06. Likewise, reload systemd, enable kube-proxy at boot and start it, then check its status.

```bash
systemctl daemon-reload
systemctl enable --now kube-proxy
systemctl status kube-proxy
```

weiyigeek.top - kube-proxy service status

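8) Deploy and configure the Calico plugin. Description: when the nodes joined the cluster their status was `NotReady`; as mentioned earlier, deploying the Calico network plugin lets Pods communicate across the cluster.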

Step 01. On master-225, download the latest Calico manifest; the current version is v3.22. Project: (https://github.com/projectcalico/calico)

```bash
wget https://docs.projectcalico.org/v3.22/manifests/calico.yaml
```

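Step 02. In `calico.yaml`, set the following key/value, i.e. the address pool Pods get their IPs from. Per the network plan this is `10.128.0.0/16`. Note that these lines are commented out by default, and the default pool is `192.168.0.0/16`.

```bash
$ vim calico.yaml
            - name: CALICO_IPV4POOL_CIDR
              value: "10.128.0.0/16"
```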
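Instead of editing with vim, the two commented lines can be enabled with `sed`. This sketch assumes the manifest contains them exactly as `# - name: CALICO_IPV4POOL_CIDR` and `#   value: "192.168.0.0/16"` (check first with `grep -n CALICO_IPV4POOL_CIDR calico.yaml`). The substitutions keep the entries aligned with the neighbouring `env` items, so the YAML stays valid. The function name is hypothetical:

```bash
#!/usr/bin/env bash
# set_calico_pool_cidr FILE CIDR
# Uncomments CALICO_IPV4POOL_CIDR in a calico.yaml manifest (in place) and sets its value.
set_calico_pool_cidr() {
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e "s|#   value: \"192.168.0.0/16\"|  value: \"$2\"|" \
    "$1"
}

# set_calico_pool_cidr calico.yaml 10.128.0.0/16
```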

Step 03. Deploy Calico to the cluster.

```bash
kubectl apply -f calico.yaml
```

Step 04. Check the Calico Pods on every node; a status of `Running` means the deployment succeeded.

```bash
$ kubectl get pod -A -o wide
```

Step 05. Check whether every node in the cluster is now `Ready` (`kubectl get nodes`); a `STATUS` of `Ready` means the node is healthy.

Step 06. A short digression: you may notice that in a binary installation the master nodes show `<none>` under ROLES instead of the familiar `master`. Moreover, starting with Kubernetes 1.24, kubeadm no longer labels the nodes that run control-plane components as "master", simply because the word is considered offensive. In recent years many computer systems that used master/slave to describe primary and secondary nodes have renamed them; "slave" disappeared a couple of years ago, and now "master" is going too. In any case, you can set custom node role names as follows.

```bash
kubectl label nodes master-223 node-role.kubernetes.io/control-plane=
kubectl label nodes master-224 node-role.kubernetes.io/control-plane=
kubectl label nodes master-225 node-role.kubernetes.io/control-plane=
kubectl label nodes node-1 node-role.kubernetes.io/work=
kubectl label nodes node-2 node-role.kubernetes.io/work=
# node/node-2 labeled

$ kubectl get node
NAME         STATUS   ROLES           AGE    VERSION
master-223   Ready    control-plane   3d     v1.23.6
master-224   Ready    control-plane   2d6h   v1.23.6
master-225   Ready    control-plane   6d5h   v1.23.6
node-1       Ready    work            3d     v1.23.6
node-2       Ready    work            3d     v1.23.6
```
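With more nodes, the labels can be derived from this article's host naming scheme (`master-*` for control-plane nodes, `node-*` for workers). A sketch; `role_label_for` and `label_all_nodes` are hypothetical names, and `--overwrite` makes it safe to re-run:

```bash
#!/usr/bin/env bash
# role_label_for NODE_NAME -> prints the role label for this article's naming scheme
role_label_for() {
  case "$1" in
    master-*) echo "node-role.kubernetes.io/control-plane=" ;;
    node-*)   echo "node-role.kubernetes.io/work=" ;;
    *)        return 1 ;;   # unknown naming: leave the node alone
  esac
}

# label_all_nodes: apply the role label to every node that matches the scheme
label_all_nodes() {
  for n in $(kubectl get nodes -o name); do
    n=${n#node/}            # "node/master-223" -> "master-223"
    label=$(role_label_for "$n") || continue
    kubectl label node "$n" "$label" --overwrite
  done
}
```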

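9) Deploy and configure the CoreDNS plugin. Description: as the name suggests, CoreDNS provides DNS and service discovery, so backend Pods can be reached through a Service name; whenever the backend Pod addresses change, the records behind the Service's domain name are updated promptly. CoreDNS is a CNCF graduated project.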

Official site: https://coredns.io/ , https://github.com/coredns/coredns

Step 01. Following the Kubernetes deployment notes of the coredns project on GitHub (https://github.com/coredns/deployment/tree/master/kubernetes), download the two files `deploy.sh` and `coredns.yaml.sed`.

```bash
wget -L https://github.com/coredns/deployment/raw/master/kubernetes/deploy.sh
wget -L https://github.com/coredns/deployment/raw/master/kubernetes/coredns.yaml.sed
chmod +x deploy.sh
```

Step 02. Generate the CoreDNS deployment manifest (`-i` sets the cluster DNS Service IP).

```bash
./deploy.sh -i 10.96.0.10 > coredns.yaml
```

Tip: you can also replace the placeholders in `coredns.yaml.sed` by hand, which is essentially what `deploy.sh` does:

```bash
CLUSTER_DNS_IP=10.96.0.10
CLUSTER_DOMAIN=cluster.local
REVERSE_CIDRS="in-addr.arpa ip6.arpa"
STUBDOMAINS=""
UPSTREAM=/etc/resolv.conf
YAML_TEMPLATE=coredns.yaml.sed
# "#" is used as the delimiter for UPSTREAM because the value contains "/"
sed -e "s/CLUSTER_DNS_IP/$CLUSTER_DNS_IP/g" \
    -e "s/CLUSTER_DOMAIN/$CLUSTER_DOMAIN/g" \
    -e "s?REVERSE_CIDRS?$REVERSE_CIDRS?g" \
    -e "s@STUBDOMAINS@${STUBDOMAINS}@g" \
    -e "s#UPSTREAMNAMESERVER#$UPSTREAM#g" \
    "${YAML_TEMPLATE}" > coredns.yaml
```
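Whichever way the manifest is generated, it is worth confirming that no template placeholder survived and that the Service uses the expected cluster IP; an unset variable in the `sed` call silently yields an empty value, and a missing expression leaves the placeholder in place. A small sketch (hypothetical function name; the placeholder names are those of `coredns.yaml.sed`):

```bash
#!/usr/bin/env bash
# check_coredns_manifest FILE [DNS_IP]
# Fails (and prints the offending lines) if any coredns.yaml.sed placeholder
# is left in FILE, or if the Service clusterIP is not DNS_IP (default 10.96.0.10).
check_coredns_manifest() {
  file="$1"; dns_ip="${2:-10.96.0.10}"
  if grep -nE 'CLUSTER_DNS_IP|CLUSTER_DOMAIN|REVERSE_CIDRS|STUBDOMAINS|UPSTREAMNAMESERVER' "$file"; then
    echo "unreplaced placeholders found" >&2
    return 1
  fi
  if ! grep -Eq "^[[:space:]]*clusterIP:[[:space:]]*${dns_ip}[[:space:]]*$" "$file"; then
    echo "clusterIP ${dns_ip} not found" >&2
    return 1
  fi
}
```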

Step 03. Review the generated CoreDNS manifest (coredns.yaml).

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
  - apiGroups:
    - ""
    resources:
    - endpoints
    - services
    - pods
    - namespaces
    verbs:
    - list
    - watch
  - apiGroups:
    - discovery.k8s.io
    resources:
    - endpointslices
    verbs:
    - list
    - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: k8s-app
                operator: In
                values: ["kube-dns"]
            topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: coredns/coredns:1.9.1
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.96.0.10
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
```

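Step 04. Deploy CoreDNS to the cluster and check its status.

```bash
kubectl apply -f coredns.yaml

# If a node cannot pull the image automatically, pull it manually with containerd on that node
ctr -n k8s.io i pull docker.io/coredns/coredns:1.9.1
```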

0x03 Application Deployment Tests

1. Deploy an Nginx web service

Method 1. Use `kubectl create` to quickly deploy an nginx application managed by a Deployment.

```
$ kubectl create deployment --image=nginx:latest --port=80 --replicas 1 hello-nginx
deployment.apps/hello-nginx created

$ kubectl get pod -o wide --show-labels
NAME                          READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES   LABELS
hello-nginx-7f4ff84cb-mjw79   1/1     Running   0          65s   10.128.17.203   master-225   <none>           <none>            app=hello-nginx,pod-template-hash=7f4ff84cb

$ kubectl expose deployment hello-nginx --port=80 --target-port=80
service/hello-nginx exposed

$ kubectl get svc hello-nginx
NAME          TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
hello-nginx   ClusterIP   10.96.122.135   <none>        80/TCP    23s

$ curl -I 10.96.122.135
HTTP/1.1 200 OK
Server: nginx/1.21.5
Date: Sun, 08 May 2022 09:58:27 GMT
Content-Type: text/html
Content-Length: 615
Last-Modified: Tue, 28 Dec 2021 15:28:38 GMT
Connection: keep-alive
ETag: "61cb2d26-267"
Accept-Ranges: bytes
```

Method 2. Alternatively, create the nginx service from a resource manifest, used here to deploy my personal homepage.

```bash
tee nginx.yaml <<'EOF'
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nginx-web
  namespace: default
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  serviceName: "nginx-service"
  template:
    metadata:
      labels:
        app: nginx
    spec:
      volumes:
      - name: workdir
        emptyDir: {}
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - name: http
          protocol: TCP
          containerPort: 80
        volumeMounts:
        - name: workdir
          mountPath: "/usr/share/nginx/html"
      # The init container clones the site into the shared emptyDir before nginx starts
      initContainers:
      - name: init-html
        image: bitnami/git:2.36.1
        command: ['sh', '-c', "git clone --depth=1 https://github.com/WeiyiGeek/WeiyiGeek.git /app/html"]
        volumeMounts:
        - name: workdir
          mountPath: "/app/html"
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30001
    protocol: TCP
  selector:
    app: nginx
EOF
```

Step 02. Deploy the resources and inspect the Pods.

```
$ kubectl apply -f nginx.yaml
statefulset.apps/nginx-web created
service/nginx-service created

$ kubectl get pod nginx-web-0
NAME          READY   STATUS     RESTARTS   AGE
nginx-web-0   0/1     Init:0/1   0          3m19s

$ kubectl get pod nginx-web-0 -o wide
NAME          READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
nginx-web-0   1/1     Running   0          12m   10.128.251.72   master-224   <none>           <none>

$ kubectl describe svc nginx-service
Name:                     nginx-service
Namespace:                default
Labels:                   <none>
Annotations:              <none>
Selector:                 app=nginx
Type:                     NodePort
IP Family Policy:         SingleStack
IP Families:              IPv4
IP:                       10.96.14.218
IPs:                      10.96.14.218
Port:                     <unset>  80/TCP
TargetPort:               80/TCP
NodePort:                 <unset>  30001/TCP
Endpoints:                10.128.251.72:80
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>
```

Step 03. Open 10.10.107.225:30001 in a browser to reach the deployed nginx application, and use `kubectl logs -f nginx-web-0` to follow the Pod's logs.

weiyigeek.top - the deployed personal homepage

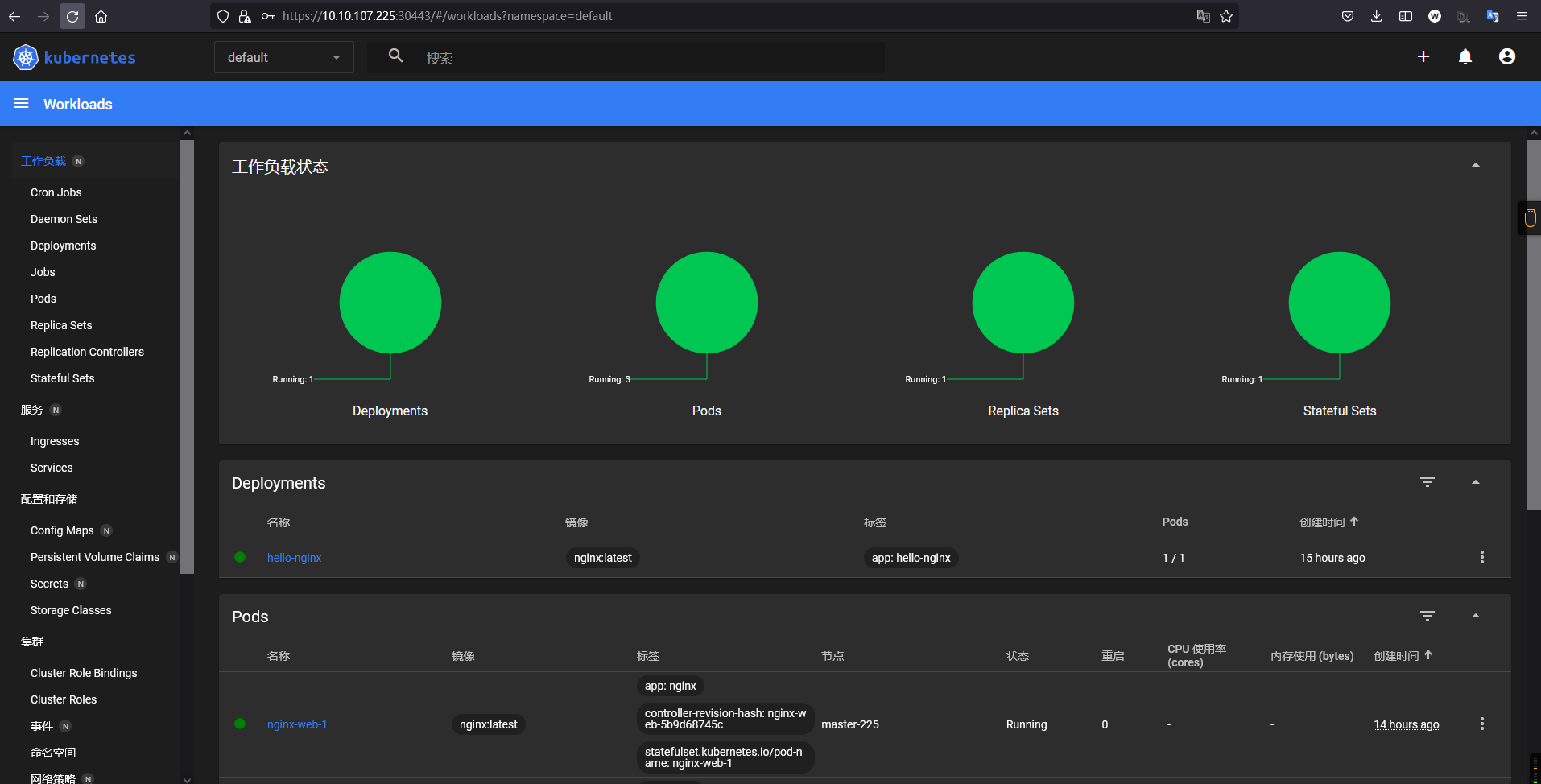
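A NodePort Service listens on its node port on every node's IP, not only on the node running the Pod. A quick sketch that checks all nodes from the host plan; the function name is hypothetical, and it only looks at the HTTP status code:

```bash
#!/usr/bin/env bash
# check_nodeport PORT IP [IP ...]
# Requests http://IP:PORT/ on every node and prints the HTTP status code;
# returns non-zero if any node does not answer with 200.
check_nodeport() {
  port="$1"; shift
  rc=0
  for ip in "$@"; do
    # curl prints 000 when the connection fails
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "http://${ip}:${port}/") || true
    echo "${ip}:${port} -> ${code}"
    [ "$code" = "200" ] || rc=1
  done
  return "$rc"
}

# check_nodeport 30001 10.10.107.223 10.10.107.224 10.10.107.225 10.10.107.226 10.10.107.227
```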

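2. Deploy the native Kubernetes Dashboard UI. Description: Kubernetes Dashboard is a general-purpose, web-based UI for Kubernetes clusters. It lets users manage and troubleshoot the applications running in the cluster, as well as manage the cluster itself.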

Project: https://github.com/kubernetes/dashboard/

Step 01. Download the Dashboard manifest from GitHub; the current latest version is v2.5.1.

```bash
wget -L https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.1/aio/deploy/recommended.yaml
kubectl apply -f recommended.yaml

# List the images the manifest uses (e.g. to pre-pull them)
grep "image:" recommended.yaml

# Alternatively, apply the manifest directly from the URL
kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.1/aio/deploy/recommended.yaml
```

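Step 02. Check that the deployed dashboard resources are healthy, then change the kubernetes-dashboard Service type to NodePort so it can be reached from outside the cluster.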

```bash
$ kubectl get deploy,svc -n kubernetes-dashboard -o wide
NAME                                        READY   UP-TO-DATE   AVAILABLE   AGE     CONTAINERS                  IMAGES                                SELECTOR
deployment.apps/dashboard-metrics-scraper   1/1     1            1           7m45s   dashboard-metrics-scraper   kubernetesui/metrics-scraper:v1.0.7   k8s-app=dashboard-metrics-scraper
deployment.apps/kubernetes-dashboard        1/1     1            1           7m45s   kubernetes-dashboard        kubernetesui/dashboard:v2.5.1         k8s-app=kubernetes-dashboard

NAME                                TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE     SELECTOR
service/dashboard-metrics-scraper   ClusterIP   10.96.37.134   <none>        8000/TCP   7m45s   k8s-app=dashboard-metrics-scraper
service/kubernetes-dashboard        ClusterIP   10.96.26.57    <none>        443/TCP    7m45s   k8s-app=kubernetes-dashboard

# switch the Service to NodePort and pin nodePort 30443
$ kubectl edit svc -n kubernetes-dashboard kubernetes-dashboard
apiVersion: v1
kind: Service
.....
spec:
  .....
  ports:
  - port: 443
    protocol: TCP
    targetPort: 8443
    nodePort: 30443
  selector:
    k8s-app: kubernetes-dashboard
  sessionAffinity: None
  type: NodePort
```
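`kubectl edit` opens an interactive editor, which is awkward in scripts. A non-interactive alternative is `kubectl patch`, which defaults to a strategic merge patch. Because Service ports are merged on their `port` field, a patch that names only port 443 updates that entry in place. The hypothetical `nodeport_patch` helper below only builds that JSON. The port numbers 443/8443/30443 are taken from the edit above.

```bash
#!/usr/bin/env bash
# nodeport_patch PORT TARGET_PORT NODE_PORT
# Hypothetical helper: emit a strategic-merge patch that switches a Service to NodePort
# and pins NODE_PORT on the ports entry whose "port" equals PORT.
nodeport_patch() {
  local port="$1" target="$2" node="$3"
  printf '{"spec":{"type":"NodePort","ports":[{"port":%d,"protocol":"TCP","targetPort":%d,"nodePort":%d}]}}\n' \
    "$port" "$target" "$node"
}

# Example (same result as the kubectl edit above):
# kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard \
#   -p "$(nodeport_patch 443 8443 30443)"
```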

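Step 03. The default dashboard deployment includes only the minimal set of RBAC permissions it needs to run. To manage cluster resources from the dashboard, we usually also need to create a custom administrator role for kubernetes-dashboard. Reference: https://github.com/kubernetes/dashboard/blob/master/docs/user/access-control/README.md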

```bash
# the default kubernetes-dashboard service account and its token (minimal permissions only)
kubectl get sa -n kubernetes-dashboard kubernetes-dashboard
kubectl describe secrets -n kubernetes-dashboard kubernetes-dashboard-token-jhdpb | grep '^token:' | awk '{print $2}'
```
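The `grep '^token:' | awk` pipeline above simply picks the `token:` line out of the `kubectl describe` output. The sketch below wraps the same logic in a reusable function. The name `token_from_describe` is hypothetical. Unlike the bare pipeline, it fails when no token line is found, which is easier to notice than an empty login field.

```bash
#!/usr/bin/env bash
# token_from_describe
# Hypothetical helper: read `kubectl describe secret` output on stdin and print the
# value of the "token:" line; return non-zero if no such line exists.
token_from_describe() {
  awk '/^token:/ { print $2; found = 1 } END { if (!found) exit 1 }'
}

# Example:
# kubectl describe secrets -n kubernetes-dashboard kubernetes-dashboard-token-jhdpb \
#   | token_from_describe
```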

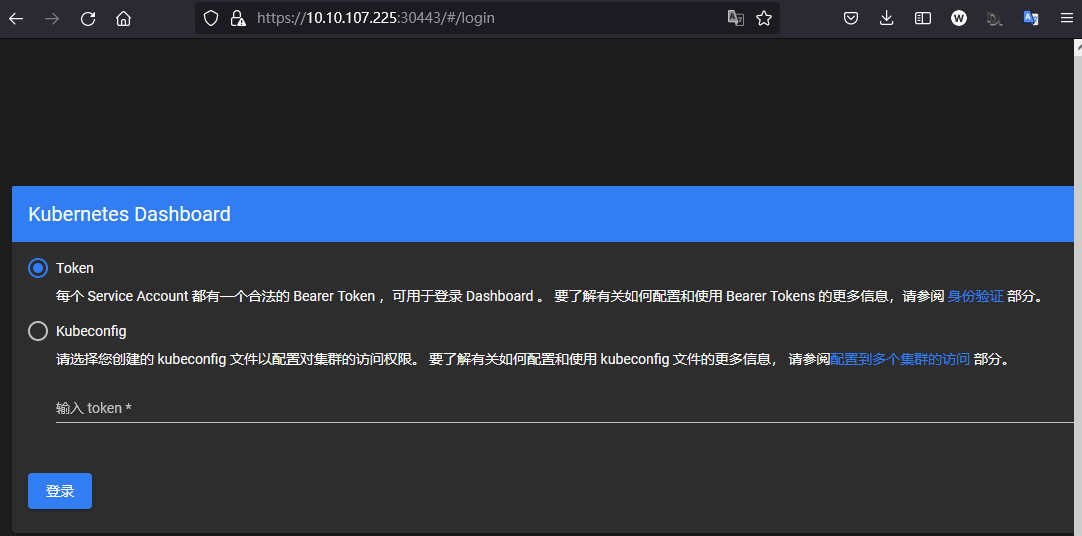

weiyigeek.top - the dashboard's two default authentication methods

Kubernetes Dashboard supports several ways of authenticating users:

- Authorization header
- Bearer Token (default)
- Username/password
- Kubeconfig file (default)

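Tip: Here we use the Bearer Token method. To keep the demonstration simple, we grant administrator privileges (Admin privileges) to the Dashboard service account. This is generally not recommended in production. There, you should instead limit the account to resources in one or more specific namespaces.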

```bash
tee rbac-dashboard-admin.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin
  namespace: kubernetes-dashboard
---
# ClusterRoleBinding is cluster-scoped, so it takes no metadata.namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: dashboard-admin
  namespace: kubernetes-dashboard
EOF

kubectl apply -f rbac-dashboard-admin.yaml
```
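For the production setup suggested in the tip above, a namespace-scoped RoleBinding can replace the cluster-wide cluster-admin binding. The sketch below generates one that binds the built-in `admin` ClusterRole (a standard user-facing role granting read/write access within a namespace) only inside the target namespace. The function name `gen_ns_rolebinding` and the default choice of `admin` rather than `edit` or `view` are assumptions made for illustration.

```bash
#!/usr/bin/env bash
# gen_ns_rolebinding SA SA_NAMESPACE TARGET_NAMESPACE [CLUSTERROLE]
# Hypothetical helper: print a RoleBinding that grants the service account SA
# (living in SA_NAMESPACE) the given ClusterRole only inside TARGET_NAMESPACE.
gen_ns_rolebinding() {
  local sa="$1" sa_ns="$2" target_ns="$3" role="${4:-admin}"
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ${sa}-${role}
  namespace: ${target_ns}
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ${role}
subjects:
- kind: ServiceAccount
  name: ${sa}
  namespace: ${sa_ns}
EOF
}

# Example: limit dashboard-admin to the default namespace instead of the whole cluster.
# gen_ns_rolebinding dashboard-admin kubernetes-dashboard default | kubectl apply -f -
```

A RoleBinding cannot grant access to cluster-scoped resources. With such a binding, dashboard views of objects like Nodes will therefore show permission errors, which is the expected trade-off.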

Step 04. Get the name of the secret created for the dashboard-admin service account, then read its authentication token. This token is used to log in to the dashboard deployed above.

```bash
kubectl get sa -n kubernetes-dashboard dashboard-admin -o yaml | grep "\- name" | awk '{print $3}'
kubectl describe secrets -n kubernetes-dashboard dashboard-admin-token-crh7v | grep "^token:" | awk '{print $2}'
eyJhbGciOiJSUzI1NiIsImtpZCI6IkJXdm1YSGNSQ3VFSEU3V0FTRlJKcU10bWxzUDZPY3lfU0lJOGJjNGgzRXMifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tY3JoN3YiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNDY3ODEwMDMtM2MzNi00NWE1LTliYTQtNDY3MTQ0OWE2N2E0Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbiJ9.X10AzWBxaHObYGoOqjfw3IYkhn8L5E7najdGSeLavb94LX5BY8_rCGizkWgNgNyvUe39NRP8r8YBU5sy9F2K-kN9_5cxUX125cj1drLDmgPJ-L-1m9-fs-luKnkDLRE5ENS_dgv7xsFfhtN7s9prgdqLw8dIrhshHVwflM_VOXW5D26QR6izy2AgPNGz9cRh6x2znrD-dpUNHO1enzvGzlWj7YhaOUFl310V93hh6EEc57gAwmDQM4nWP44KiaAiaW1cnC38Xs9CbWYxjsfxd3lObWShOd3knFk5PUVSBHo0opEv3HQ_-gwu6NGV6pLMY52p_JO1ECPSDnblVbVtPQ
```
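A service-account token is a JWT. Decoding its middle segment is therefore one way to confirm you copied the right token, and not, for example, the default kubernetes-dashboard account's token from step 03. That segment is base64url-encoded JSON whose `sub` claim names the service account. The sketch below does this with coreutils only. `jwt_payload` is a hypothetical name, and it only decodes the payload; it does not verify the signature.

```bash
#!/usr/bin/env bash
# jwt_payload TOKEN
# Hypothetical helper: print the decoded JSON payload (second dot-separated segment)
# of a JWT. Decoding only; the signature is NOT checked.
jwt_payload() {
  local seg
  seg=$(printf '%s' "$1" | cut -d. -f2)
  # base64url -> base64: swap the URL-safe characters back
  seg=$(printf '%s' "$seg" | tr '_-' '/+')
  # restore the '=' padding that JWTs omit
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}

# Example: the sub claim should read system:serviceaccount:kubernetes-dashboard:dashboard-admin
# jwt_payload "$TOKEN" | grep -o '"sub":"[^"]*"'
```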

Step 05. Log in to the Kubernetes dashboard UI with the token obtained above.

weiyigeek.top - the dashboard with administrator privileges

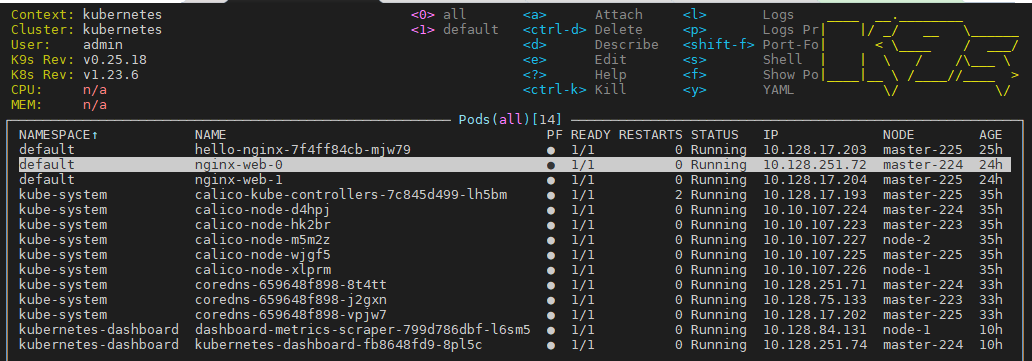

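3. Deploy the K9s tool to manage the cluster

Description: k9s is a CLI for managing Kubernetes clusters that provides a terminal UI for interacting with them. By wrapping kubectl functionality, k9s continuously watches Kubernetes for changes and offers follow-up commands for interacting with the resources you observe. Put simply, k9s lets developers quickly inspect and fix everyday problems when running Kubernetes. https://k9scli.io/ https://github.com/derailed/k9s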

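Here we install the binary package:

```bash
# download and extract (no -b: tar must wait until the download has finished;
# note the capital L in the release file name)
wget -c https://github.com/derailed/k9s/releases/download/v0.25.18/k9s_Linux_x86_64.tar.gz
tar -zxf k9s_Linux_x86_64.tar.gz
rm k9s_Linux_x86_64.tar.gz LICENSE README.md

# give the current user a kubeconfig
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
# or point KUBECONFIG at the admin config directly
export KUBECONFIG=/etc/kubernetes/admin.conf

# launch k9s (the author keeps the binary under /nfsdisk-31/newK8s-Backup/tools)
./k9s
# inside k9s, type :quit to exit
```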
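GitHub release asset names are case-sensitive (k9s_Linux_x86_64.tar.gz, with a capital L), so building the file name in one place avoids a mismatch between the download and extract steps. The sketch below is minimal and assumes the `k9s_Linux_<arch>.tar.gz` naming used by v0.25.18. Other releases or architectures may name their assets differently. `k9s_asset` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# k9s_asset VERSION [ARCH]
# Hypothetical helper: print the release asset file name and its download URL,
# assuming the naming scheme k9s_Linux_<ARCH>.tar.gz (ARCH defaults to x86_64).
k9s_asset() {
  local ver="$1" arch="${2:-x86_64}"
  local file="k9s_Linux_${arch}.tar.gz"
  printf '%s\n' "$file" \
    "https://github.com/derailed/k9s/releases/download/${ver}/${file}"
}

# Example: download and extract only the k9s binary, using one file name for both steps.
# { read -r file; read -r url; } < <(k9s_asset v0.25.18)
# wget -c "$url" && tar -zxf "$file" k9s
```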

weiyigeek.top - managing the cluster with K9s

For more usage tips, see: [Hands-on with K9s, a K8s cluster management tool](https://blog.weiyigeek.top/2022/1-1-582.html)

0x04 Pitfalls and Fixes

Fixing "Unit file /etc/systemd/system/containerd.service is masked." when enabling containerd autostart after a binary installation

Error message: Failed to enable unit: Unit file /etc/systemd/system/containerd.service is masked.

Cause: the containerd.service unit had already been installed through the yum or apt package manager before the binary installation.

Solution:

```bash
systemctl unmask containerd
systemctl daemon-reload && systemctl enable --now containerd.service
```
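`systemctl mask` works by linking the unit file to /dev/null, which is why `enable` refuses it. `systemctl is-enabled containerd` reports `masked` in this state. For scripts, checking for that symlink before enabling makes the failure easy to diagnose. `is_masked_unit` below is a hypothetical helper that takes the unit path from the error message above.

```bash
#!/usr/bin/env bash
# is_masked_unit PATH
# Hypothetical helper: succeed if PATH is a symlink to /dev/null, i.e. a masked unit.
is_masked_unit() {
  [ -L "$1" ] && [ "$(readlink "$1")" = "/dev/null" ]
}

# Example:
# if is_masked_unit /etc/systemd/system/containerd.service; then
#   systemctl unmask containerd && systemctl daemon-reload
# fi
# systemctl enable --now containerd.service
```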

Calico network plugin on a binary-installed K8s cluster: one calico-node-xxx Pod always shows STATUS Running but READY 0/1

Error message:

```bash
$ kubectl get pod -A
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-6f7b5668f7-52247   1/1     Running   0          13m
kube-system   calico-node-kb27z                          0/1     Running   0          13m

$ kubectl describe pod -n kube-system calico-node-kb27z
  Warning  Unhealthy  14m  kubelet  Readiness probe failed: 2022-05-07 13:15:12.460 [INFO][204] confd/health.go 180: Number of node(s) with BGP peering established = 0
calico/node is not ready: BIRD is not ready: BGP not established with 10.10.107.223,10.10.107.224,10.10.107.225,10.10.107.226
```

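Cause: by default (the first-found method), the calico-node DaemonSet uses the IP of the first network interface it finds as the calico node IP. Because interface names are not uniform across the cluster's nodes, calico may pick the wrong interface and therefore the wrong IP. In that case, set the IP_AUTODETECTION_METHOD variable to a wildcard interface name or an IP address.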

Solution:

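```bash
# check the NIC names on each node
ip addr

# edit the calico-node DaemonSet and add IP_AUTODETECTION_METHOD to the calico-node container env
kubectl edit daemonsets.apps -n kube-system calico-node
            - name: IP
              value: autodetect
            - name: IP_AUTODETECTION_METHOD
              value: interface=en.*
```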
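Before setting `interface=en.*`, it is worth checking which interface names each node actually has, so that the pattern matches exactly one uplink per node. The sketch below takes `ip -o link show` output on stdin and prints the names that match an extended regex. `matching_ifaces` is a hypothetical name. Anchoring the pattern with `^` is our own choice here, and the result only approximates Calico's own matching. The commented example also shows how to set the same variable without an editor via `kubectl set env`.

```bash
#!/usr/bin/env bash
# matching_ifaces REGEX
# Hypothetical helper: read `ip -o link show` output on stdin and print the interface
# names that match REGEX (grep -E syntax), one per line.
matching_ifaces() {
  # ip -o prints "IDX: NAME: <FLAGS> ..."; NAME may carry an "@parent" suffix
  awk -F': ' '{ split($2, n, "@"); print n[1] }' | grep -E "$1"
}

# Example, on each node:
# ip -o link show | matching_ifaces '^en'
# Non-interactive alternative to kubectl edit:
# kubectl set env daemonset/calico-node -n kube-system IP_AUTODETECTION_METHOD='interface=en.*'
```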

Further reading: Calico official documentation https://docs.projectcalico.org/reference/node/configuration#ip-autodetection-methods

```yaml
# alternatively, use the interface whose IP is used to reach the given address
- name: IP_AUTODETECTION_METHOD
  value: can-reach=114.114.114.114
```

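With a binary deployment of containerd 1.6.3, the CNI config version used for loopback was raised to 1.0.0, which prevents the CNI loopback plugin from being enabled. The installed version can be checked as follows; the author's environment reports v1.6.4: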

```bash
$ containerd --version
containerd github.com/containerd/containerd v1.6.4 212e8b6fa2f44b9c21b2798135fc6fb7c53efc16
```
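To compare the installed containerd against a known-good version in a script, `sort -V` (GNU coreutils version sort) is enough. The sketch takes v1.6.4 as the comparison point only because that is the version shown in the output above. `version_ge` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# version_ge A B
# Hypothetical helper: succeed if version A >= version B (a leading "v" is ignored).
version_ge() {
  local a="${1#v}" b="${2#v}"
  # if B sorts first (or A == B), then A >= B
  [ "$(printf '%s\n%s\n' "$a" "$b" | sort -V | head -n1)" = "$b" ]
}

# Example: take the version field from `containerd --version` and compare.
# cur=$(containerd --version | awk '{print $3}')
# version_ge "$cur" v1.6.4 || echo "containerd $cur is older than v1.6.4"
```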

When deploying coredns in the cluster, the Pod shows CrashLoopBackOff and reports "Readiness probe failed" with connection refused on port 8181

Error message:

```bash
kubectl get pod -n kube-system -o wide
kubectl describe pod -n kube-system coredns-659648f898-crttw
```

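Cause: coredns takes the host's /etc/resolv.conf into the container and uses it as its upstream configuration. The nameserver listed there, 127.0.0.53, is the systemd-resolved stub listener on the host's loopback interface, so coredns cannot reach it from inside the Pod and the coredns Pod fails to start. In addition, if we edit /etc/resolv.conf by hand, systemd-resolved.service periodically rewrites the file and overwrites our change. The fixes commonly found through Baidu are therefore only temporary: the next time the Pod container restarts, it falls back into the same error state.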

```bash
$ ls -alh /etc/resolv.conf
$ cat /etc/resolv.conf
```
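The failure comes down to a `nameserver` line that points at a loopback address (127.0.0.53 from systemd-resolved), which is only reachable on the host itself. The sketch below detects that condition before coredns is restarted. `has_loopback_ns` is a hypothetical helper, and it treats any 127.x.x.x or ::1 nameserver as loopback.

```bash
#!/usr/bin/env bash
# has_loopback_ns FILE
# Hypothetical helper: succeed if FILE (a resolv.conf) lists a loopback nameserver.
has_loopback_ns() {
  grep -Eq '^[[:space:]]*nameserver[[:space:]]+(127\.[0-9.]+|::1)([[:space:]]|$)' "$1"
}

# Example:
# has_loopback_ns /etc/resolv.conf && echo "coredns would forward to a loopback resolver"
# has_loopback_ns /run/systemd/resolve/resolv.conf || echo "upstream list is usable"
```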

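Solution: the recommended approach is to make /etc/resolv.conf a symbolic link to /run/systemd/resolve/resolv.conf. If you prefer not to use a symlink, delete the file outright instead.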

```bash
# add upstream DNS servers to systemd-resolved
tee -a /etc/systemd/resolved.conf <<'EOF'
DNS=223.6.6.6
DNS=10.96.0.10
EOF
systemctl restart systemd-resolved.service
systemd-resolve --status

# point /etc/resolv.conf at the real resolver list instead of the 127.0.0.53 stub
sudo rm -f /etc/resolv.conf
sudo ln -s /run/systemd/resolve/resolv.conf /etc/resolv.conf

# recreate the coredns Pods and scale to 3 replicas
kubectl delete pod -n kube-system -l k8s-app=kube-dns
kubectl scale deployment -n kube-system coredns --replicas 3

$ cat /etc/resolv.conf
nameserver 223.6.6.6
nameserver 10.96.0.10
nameserver 192.168.10.254
```

After granting administrator privileges, logging in to kubernetes-dashboard with the token always fails with Unauthorized (401): Invalid credentials provided

Error message: Unauthorized (401): Invalid credentials provided

```bash
$ kubectl logs -f --tail 50 -n kubernetes-dashboard kubernetes-dashboard-fb8648fd9-8pl5c
2022/05/09 00:48:50 [2022-05-09T00:48:50Z] Incoming HTTP/2.0 POST /api/v1/login request from 10.128.17.194:54676: { contents hidden }
2022/05/09 00:48:50 Non-critical error occurred during resource retrieval: the server has asked for the client to provide credentials
```

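Cause: the RBAC objects we created were fine. The problem was that kubectl get secrets returns the token base64-encoded, whereas kubectl describe secrets prints the decoded value, so the token must be read with kubectl describe secrets.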

Solution:

```bash
kubectl get sa -n kubernetes-dashboard dashboard-admin -o yaml | grep "\- name" | awk '{print $3}'
kubectl describe secrets -n kubernetes-dashboard dashboard-admin-token-crh7v | grep "^token:" | awk '{print $2}'
```
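The difference described above is easy to see in practice. `kubectl get secret` returns `.data.token` base64-encoded, and decoding that value yields the same JWT that `kubectl describe` prints. The sketch below shows the decode step. `decode_secret_value` is a hypothetical name, while the `-o jsonpath` query in the comment is standard kubectl output formatting.

```bash
#!/usr/bin/env bash
# decode_secret_value
# Hypothetical helper: read one base64-encoded Secret value on stdin (as returned by
# `kubectl get secret -o jsonpath='{.data.<key>}'`) and print it decoded.
decode_secret_value() {
  tr -d '\n' | base64 -d
}

# Example: equivalent to the describe | grep | awk pipeline above
# kubectl get secret -n kubernetes-dashboard dashboard-admin-token-crh7v \
#   -o jsonpath='{.data.token}' | decode_secret_value
```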